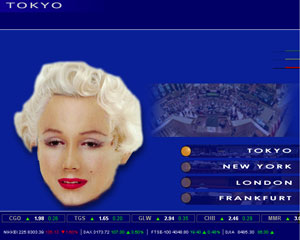

Merging Virtual Reality and Television through Interactive Virtual Actors

Marilyn - Multimodal Avatar Responsive Live Newscaster

by Sepideh Chakaveh

A recent and innovative research activity of the ERCIM E-Learning Working Group and the Fraunhofer Institute for Media Communication (IMK) merges virtual reality, artificial intelligence and television.

In recent years we have seen the Internet and television come closer in many ways. This began when streaming technology gradually opened the way to watching television programs on the Internet. IMK-ITV is now considering reversing this process, that is, implementing Internet-type applications on television (TV). Moreover, interactive TV (iTV) aims to combine traditional TV with additional services previously only available on the Internet. This is leading to the disappearance of the boundaries between two forms of content, namely infotainment and edutainment.

The development of iTV depends on several factors. The first of these is the development of the iTV production chain. This involves producing media content (i.e. a TV program), broadcasting it on a particular channel and receiving it using a TV view system. The second is the development of both the medium and paradigm of interactivity with iTV. So far, interaction with TV content is still limited, with 'clickable' interactivity as the sole paradigm of interaction, and the remote control as the sole medium of interaction. The third factor is the development of artificial intelligence (AI) techniques that serve an intelligent iTV (IiTV) platform. To this end, speech recognition, natural language understanding and decision support systems should be deployed in the development of IiTV. Such a platform has enormous potential, as the number of Internet users, while rapidly increasing, is still small compared to the number of television users.

Marilyn (Multimodal Avatar Responsive Live Newscaster) is a new system for interactive television, in which a three-dimensional virtual reality facial avatar responds to the remote control in real time, speaking to the viewer and providing the requested information. At the click of a button, Marilyn informs the viewer about the daily financial news. Here the focus is on the provision of choice as well as personalization of information in an entertaining manner. As well as offering live financial data from leading stock exchanges such as New York, London, Frankfurt and Tokyo, the system also caters for multilingual aspects of the information. Traditionally, financial news has been regarded as content-based and rather rigid in format. In contrast, the edutainment aspects of Marilyn can make such a program entertaining as well as informative. Figure 1 shows a schematic of the structure of Marilyn. The application consists of three stand-alone but interrelated sections.

Financial Content

3D Facial Avatar

The avatar may of course have any other face mapped to it, but, owing to the different bone structures of male and female faces, the sex of the facial avatar is pre-defined. This means the mesh structure for the avatar must be created in advance for a male or female presenter. Equally, the voice of a male or a female speaker should be defined, as well as the desired language or accent. For example, if Marilyn reads the news in English, then information provided from the London stock exchange could be read with a British accent, or that from the New York stock exchange with an American accent. In addition, the financial data from the Frankfurt stock exchange can be read in German.

User Interface

This project has been supported by Dr. Soha Maad, an ERCIM fellow, hosted by the Institute for Media Communications for 18 months.
