|

ERCIM News No.50, July 2002

|

Parallel Direct Solvers for Large Sparse Linear Systems

by Iain Duff and Jennifer A. Scott

In recent years, there has been an increasing demand for the efficient solution of larger and larger linear systems of equations. One way of doing this is through the development of numerical algorithms and software for parallel computers. The Numerical Analysis Group at Rutherford Appleton Laboratory is using an approach that allows to take advantage of existing expertise and experience of direct solvers to design and develop parallel solvers that offer significant speedups when used on computers with a modest number of processors.

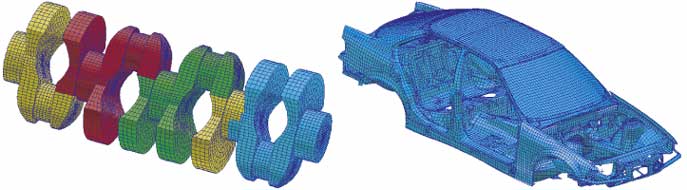

In many industrial applications, such as the analysis of very large structures, industrial processing of complex non-Newtonian liquids, and the simulation of car bodies, the solution of large, sparse linear systems of equations is the single most computationally expensive step. Thus, any reduction in the linear system solution time will result in a significant saving in the total simulation time. As time-dependent three-dimensional simulations are now commonplace, the size of the linear systems that need to be solved is becoming ever larger, fueling the demand for algorithms and software that can be used on parallel supercomputers. Parallel computers, in addition to providing possible speedup through efficient parallel execution, are also a means for obtaining more memory for direct factorization techniques, potentially enabling the solution of problems that would otherwise be intractable.

|

| A crankshaft and a car body from the automative industry that are modelled by MacNeal-Schwendler as finite element problems. The problems have 1848,770 and 227,362 unknowns, respectively. By courtesy of Stefan Mayer, MSC Munich. |

The parallel approach that we propose is based upon first subdividing the problem into a (small) number of loosely connected subproblems by ordering the matrix to bordered block diagonal form. A direct solver is then applied in parallel to each of the subproblems. Once all possible eliminations for the subproblems have been performed, there remains an interface problem, which is much smaller than the original system. The interface solution is used to complete the solution of the subproblems.

This approach has significant advantages over attempting to design a general parallel sparse direct solver. Firstly, modern direct solvers are extremely complex codes and represent substantial programming effort by experts in sparse matrix technology. In HSL 2002, we have a number of serial sparse solvers that have been developed over many years and have proved to be very robust, reliable and efficient for a wide range of practical problems. We were therefore keen to exploit our expertise with these solvers when designing parallel software. Splitting the problem into subproblems and applying one of our direct solvers to each of the subproblems allows us to do this. A second important advantage of this approach is that each processor can be preassigned all the matrix data required for the computations it has to perform before the factorization starts. Communications are only required to send the data that remains when all possible eliminations for the subproblems have been performed to the processor responsible for factorizing the interface problem. Interprocessor communication is thus both limited and structured. Finally, any existing sparse direct solver may be used to solve the interface problem.

|

HSL Software Library

The software library HSL (formerly the Harwell Subroutine Library) is a collection of portable, fully documented Fortran packages that have been written and developed primarily by the Numerical Analysis Group at RAL. HSL has particular strengths in sparse matrix computations and large-scale optimization. HSL is marketed commercially by Hyprotech UK Ltd although there are special arrangements for licencing to academic users, and a collection of older codes from the Library are more freely available in the HSL Archive. HSL routines are regularly incorporated into advanced software applications, including chemical engineering and finite-element modelling. |

Unfortunately, the factorization of the subproblems cannot be performed using an existing direct solver without some modifications. This is because standard solvers are designed to factorize the whole of the system matrix using a variant of Gaussian elimination: all the columns are eliminated in turn and the factors computed using an ordering chosen by the solver. But when applied to a subproblem that is connected to one or more other subproblems, the columns with entries in more than one subproblem cannot be eliminated. Modifications are thus needed to enable a distinction to be made between columns internal to the subproblem and those that must be passed to the interface problem.

Our work in this area began with the development of parallel frontal solvers. In recent years, we have designed and developed three codes: the first is for unsymmetric finite-element problems; the second is for symmetric positive definite finite-element problems; and the third is for highly unsymmetric linear systems such as those that arise in chemical process engineering. The codes are written in Fortran 90 and use MPI for message passing. Fortran 90 was chosen not only for its efficiency for scientific computation but also because of the features it offers. In particular, our software makes extensive use of dynamic memory allocation and this allows a much cleaner user interface. MPI is used because it is widely available and accepted by users of parallel computers. Our software does not assume that there is a single file system that can be accessed by all the processes. Thus it can be used on distributed memory parallel computers as well as on shared memory machines. Options exist for holding the matrix factors in files on disc, thus allowing the codes not only to solve very large problems but also to be used on parallel machines where each processor has access only to a limited amount of main memory.

Our parallel frontal solvers have been tested on a number of very different computing platforms, including an SGI Origin 2000, a cluster of Sun workstations, a 2-processor Compaq DS20, and a Cray T3E. For problems arising from chemical process engineering, the parallel frontal solver has been found to be significantly faster than a serial frontal code, with speedups in the range of 3 to 8 being reported on 8 processors of an Origin 2000.

Following the success of our parallel frontal solvers, we are currently working on the development of a parallel version of the well known general unsymmetric sparse direct solver MA48. This code is suitable for solving very sparse, highly unsymmetric problems and is particularly efficient when solving repeatedly for different right-hand sides. The new parallel code adopts the same design criteria as the parallel frontal solvers and, in particular, is portable, straightforward to use, efficient, and, through the range of options available to the user, flexible.

We remark that, although highly portable, our parallel solvers are only suitable for use on a modest number of processors (typically up to about 16). The reason for this limit is that, as the number of blocks in the block diagonal form increases, so too, in general, does the size of the interface problem. In our parallel solver, the interface problem is currently solved using a single processor. This presents a potential bottleneck and limits the speedups that can be achieved.

Please contact:

Iain S. Duff, CLRC

E-mail: I.Duff@rl.ac.uk

Tel: +44 1235 445803

Jennifer A. Scott, CLRC

E-mail: J.A.Scott@rl.ac.uk

Tel: +44 1235 445132