ERCIM News No.46, July 2001

by Pavel Zikovky and Pavel Slavík

Synthesizing the movements of an artificial face does not serve only to create a ‘feeling’ for the user; it also transfers information. It has been shown that entire utterances can be recognized from the movement of the speaker’s lips alone (deaf people do this, for example). Presenting speech bimodally, both visually and audibly, therefore increases the bandwidth of information that can be transferred to the user.

The strong influence of visible speech is not limited to situations in which the auditory signal is degraded: a perceiver’s recognition of sounds depends on visual information even when the audio is clear. For example, when the auditory syllable /ba/ is dubbed onto a video of a face saying /ga/, subjects typically perceive the speaker to be saying /da/, a syllable present in neither input (the McGurk effect).

Another benefit of this approach is that the only information transmitted over the Internet is text, which is small and fast to deliver. On the client side, this text is fed into the speech and visual engines.
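
To make the size argument concrete, here is a back-of-the-envelope comparison. It is a sketch under stated assumptions: the sentence is assumed to take about two seconds to speak, and the video alternative is taken as uncompressed QCIF (176×144 pixels, 24-bit color, 25 frames per second). Real videoconferencing compresses its video heavily, so this ratio overstates the gap, although text still remains orders of magnitude smaller.

```python
# Back-of-the-envelope comparison: bytes needed to send one chat line as
# text versus as uncompressed video. All video parameters are assumptions.


def text_bytes(message: str) -> int:
    """Size of the chat line when sent as UTF-8 text."""
    return len(message.encode("utf-8"))


def raw_video_bytes(seconds: float, width: int = 176, height: int = 144,
                    bytes_per_pixel: int = 3, fps: int = 25) -> int:
    """Size of uncompressed video of the same utterance (QCIF, 24-bit, 25 fps by default)."""
    frames = round(seconds * fps)
    return frames * width * height * bytes_per_pixel


if __name__ == "__main__":
    line = "Hello, how are you?"
    t = text_bytes(line)
    v = raw_video_bytes(2.0)  # assume about two seconds to speak the line
    print(f"text: {t} B, raw video: {v} B, ratio: {v // t}x")
```

Under these assumptions the 19-byte sentence compares with 3,801,600 bytes of raw video.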

Lip Animation

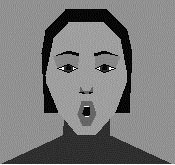

Lips animation is done by setting the face to the position of the appropriate viseme (viseme corresponds to phoneme) and then morphing to the following one. The viseme parameter allows choosing from a set of visemes independently from the other parameters, thus allowing viseme rendering without having to express them in terms of other parameters, or to affect the result of other parameters, insuring the correct rendering of visemes.

|

| Viseme ‘o’. |
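
The viseme-to-viseme morphing described above can be sketched as linear interpolation of control points. This is a minimal illustration, not the authors’ implementation: the four mouth control points, their coordinates and the small phoneme-to-viseme table are all hypothetical, and a real system would morph a full face mesh with timing supplied by the speech engine.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Shape = Dict[str, Point]

# Hypothetical viseme shapes: a few 2D mouth control points per viseme.
VISEMES: Dict[str, Shape] = {
    "rest": {"upper_lip": (0.0, 0.10), "lower_lip": (0.0, -0.10),
             "left_corner": (-0.50, 0.0), "right_corner": (0.50, 0.0)},
    "o": {"upper_lip": (0.0, 0.25), "lower_lip": (0.0, -0.35),
          "left_corner": (-0.25, 0.0), "right_corner": (0.25, 0.0)},
    "m": {"upper_lip": (0.0, 0.0), "lower_lip": (0.0, 0.0),
          "left_corner": (-0.45, 0.0), "right_corner": (0.45, 0.0)},
}

# Hypothetical many-to-one table: several phonemes share one viseme.
PHONEME_TO_VISEME = {"o": "o", "u": "o", "m": "m", "b": "m", "p": "m", "_": "rest"}


def morph(a: Shape, b: Shape, t: float) -> Shape:
    """Linearly interpolate every control point from shape a (t=0) to shape b (t=1)."""
    t = min(max(t, 0.0), 1.0)
    return {k: (a[k][0] + (b[k][0] - a[k][0]) * t,
                a[k][1] + (b[k][1] - a[k][1]) * t) for k in a}


def frames_for(phonemes: List[str], steps: int = 4) -> List[Shape]:
    """Set the face to each viseme in turn, then morph towards the following one."""
    if not phonemes:
        return []
    shapes = [VISEMES[PHONEME_TO_VISEME[p]] for p in phonemes]
    frames: List[Shape] = []
    for cur, nxt in zip(shapes, shapes[1:]):
        for i in range(steps):
            frames.append(morph(cur, nxt, i / steps))
    frames.append(shapes[-1])  # end exactly on the last viseme
    return frames


if __name__ == "__main__":
    for frame in frames_for(["_", "m", "o", "_"]):
        print(frame["lower_lip"])
```

Because each viseme is looked up directly by name, it is reproduced exactly at its keyframe, independent of any other animation parameters, which mirrors the independence property described in the paragraph above.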

Adapting Face Presentation to Different Platforms

Because users join the discussion (chat) over the Internet, they may do so from a variety of hardware and software platforms whose display capabilities and computational power differ. To provide the best possible result on each kind of computer, we use different algorithms for displaying the face. For example, on a handheld device with a slow processor and a low-resolution display the face is shown as a simple 2-D model, while on a well-equipped PC the same face is shown as a full-color 3-D model. The following paragraphs describe how the display is adapted to each class of platform and device.
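
One way to organize this per-platform choice is a small capability check that maps a device profile to one of the three face representations discussed in this section. The `DeviceProfile` fields and every threshold below are hypothetical values chosen for illustration; the article does not specify how its clients make the decision.

```python
from dataclasses import dataclass
from enum import Enum


class FaceRenderer(Enum):
    FLAT_2D_TRIANGLES = "simple 2D triangle face"          # handheld devices
    POLYGONAL_3D = "simple polygonal 3D head"              # low-end PCs, notebooks
    PHOTO_VISEME_MORPH = "morphing of viseme photographs"  # high-end machines


@dataclass
class DeviceProfile:
    # Hypothetical capability fields a client might report about itself.
    screen_width: int
    screen_height: int
    color_depth_bits: int
    cpu_mhz: int
    memory_mb: int


def choose_renderer(dev: DeviceProfile) -> FaceRenderer:
    """Return the richest face representation the device can plausibly sustain.

    Every threshold here is an illustrative guess, not a value from the article.
    """
    pixels = dev.screen_width * dev.screen_height
    if pixels < 320 * 240 or dev.cpu_mhz < 100 or dev.color_depth_bits < 8:
        return FaceRenderer.FLAT_2D_TRIANGLES
    if dev.cpu_mhz < 800 or dev.memory_mb < 256 or dev.color_depth_bits < 24:
        return FaceRenderer.POLYGONAL_3D
    return FaceRenderer.PHOTO_VISEME_MORPH


if __name__ == "__main__":
    palmtop = DeviceProfile(160, 160, 4, 33, 8)
    notebook = DeviceProfile(1024, 768, 16, 500, 128)
    desktop = DeviceProfile(1280, 1024, 24, 1500, 512)
    for name, dev in [("palmtop", palmtop), ("notebook", notebook), ("desktop", desktop)]:
        print(name, "->", choose_renderer(dev).value)
```

Checking the weakest tier first means a device falls back to the simplest representation as soon as any single resource is insufficient.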

The advantage of handheld devices (such as palmtops and Psions) is that anyone can carry them anywhere, without needing an external power supply or any special skill. At present, however, they usually lack the display quality and computational power needed for complex graphics, so simple 2D drawing is the best solution for them. As Figure 1 shows, a face simplified to a 2D set of triangles still carries a good deal of meaning. The drawback of this ‘oversimplification’ is that it cannot convey the participant’s position in space. On the other hand, it is very useful on handheld devices serving as simple autonomous bimodal agents (such as a visitor’s guide).

When users access the system from an ordinary low-end computer, a notebook or a high-end portable device, the available computational power no longer forces the display to be reduced to simple 2D polygons. A simple polygonal 3D model of the head can therefore be used, which provides additional space for conveying information: several users can be shown arranged in space, for example seated around a table.
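
Arranging several participants around a table amounts to spacing their heads evenly on a circle and turning each one toward the center. The sketch below assumes a floor plane spanned by x and z, with yaw measured in degrees from the +x axis; these conventions and the function name are illustrative, not taken from the authors’ 3D client.

```python
import math
from typing import List, Tuple


def seat_around_table(n: int, radius: float = 1.0) -> List[Tuple[float, float, float]]:
    """Place n heads evenly on a circle of the given radius in the x-z floor plane.

    Returns (x, z, yaw_degrees) per head, where the yaw turns the head to face
    the table center at the origin.
    """
    seats = []
    for i in range(n):
        angle = 2.0 * math.pi * i / n
        x = radius * math.cos(angle)
        z = radius * math.sin(angle)
        # The head looks along (-x, -z), i.e. toward the origin.
        yaw = math.degrees(math.atan2(-z, -x)) % 360.0
        seats.append((x, z, yaw))
    return seats


if __name__ == "__main__":
    for x, z, yaw in seat_around_table(4, radius=2.0):
        print(f"x={x:+.2f} z={z:+.2f} yaw={yaw:.1f}")
```

The renderer would then translate each participant’s head model to (x, z) and rotate it by the returned yaw.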

On high-end devices we can produce either a high-quality 3D animation, which merely refines the previous option, or a high-quality full-color 2D animation resembling television, which is our goal. Here the face is animated by morphing between real visemes (photographs of a real person) in real time as the speech flows. This is computationally expensive and requires a lot of memory, but it produces a realistic video of a person speaking.
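
The photographic variant can be sketched in two parts: a cross-dissolve between two viseme photographs and a rough estimate of the memory needed to keep them all loaded. This is a simplification: a true image morph also warps facial features (lips, jaw) into correspondence before blending, and only the blending step is shown here. Images are plain lists of RGB tuples, and the photo count and resolution in the usage note are assumptions.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def cross_dissolve(a: Image, b: Image, t: float) -> Image:
    """Blend photo a (t=0) into photo b (t=1) pixel by pixel.

    A full morph would first warp feature points of both photos toward each
    other; this sketch performs only the cross-dissolve.
    """
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("viseme photographs must have the same size")
    t = min(max(t, 0.0), 1.0)
    return [[tuple(round((1 - t) * ca + t * cb) for ca, cb in zip(pa, pb))
             for pa, pb in zip(ra, rb)]
            for ra, rb in zip(a, b)]


def viseme_memory_bytes(n_visemes: int, width: int, height: int,
                        bytes_per_pixel: int = 3) -> int:
    """Uncompressed memory needed to keep every viseme photograph resident."""
    return n_visemes * width * height * bytes_per_pixel
```

For example, `viseme_memory_bytes(16, 640, 480)` gives 14,745,600 bytes (roughly 14.7 MB) for sixteen uncompressed 640×480 photographs, before any in-between frames are generated, which illustrates why this option is reserved for well-equipped machines.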

Conclusion

We surveyed the capabilities of candidate devices, which led us to create three different clients for different types of machines and environments. The look of the face can be adapted, and this can be exploited in multi-user environments. The simplest face representation, used on handheld devices, makes it possible to build simple portable bimodal interfaces. Because the visual quality can be adapted to the computer and platform, and the only information transmitted is text, our approach is usable on a large number of devices that are not capable of conventional videoconferencing. To implement real bimodal chat over the Internet, we have used Jabber (www.jabber.org) as the network communication layer.
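
Because only text travels between clients, each chat line fits naturally into a Jabber `<message/>` stanza. The sketch below uses Python’s standard `xml.etree.ElementTree` to build and parse such a stanza; the addresses are hypothetical, and a real client would send it over an authenticated Jabber (XMPP) stream, normally through a Jabber library, rather than as a bare string.

```python
import xml.etree.ElementTree as ET


def make_chat_stanza(sender: str, recipient: str, text: str) -> str:
    """Wrap one chat line in a Jabber <message type="chat"> stanza."""
    msg = ET.Element("message", {"from": sender, "to": recipient, "type": "chat"})
    body = ET.SubElement(msg, "body")
    body.text = text  # ElementTree escapes <, > and & automatically
    return ET.tostring(msg, encoding="unicode")


def extract_text(stanza: str) -> str:
    """Recover the chat text from a received stanza (empty if there is no body)."""
    body = ET.fromstring(stanza).find("body")
    if body is None:
        return ""
    return body.text or ""


if __name__ == "__main__":
    s = make_chat_stanza("alice@example.org/home", "bob@example.org", "Hello, how are you?")
    print(s)
    print(extract_text(s))
```

On receipt, the client would pass the result of `extract_text` to its local speech and face-animation engines, so the animation is generated entirely on the receiving side.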

Please contact:

Pavel Zikovky and Pavel Slavík — Czech Technical University, Prague

Tel: +420 2 2435 7617

E-mail: xzikovsk@sun.felk.cvut.cz, slavik@cs.felk.cvut.cz