ERCIM News No.46, July 2001 [contents]

by Christoph Wick and Stefan Pieper

Efficient navigation of three-dimensional image data requires visualisation and interaction techniques that are derived from, and suited to, the tasks at hand. Scientists at the GMD Institute for Applied Information Technology (FIT) present two examples of a design principle that improves navigation in complex spatial environments: constraint-based navigation in three-dimensional scenes.

Medical informatics often deals with volume data sets acquired by CT, MRI or 3D ultrasound scanners. In the field of interactive computer graphics, much research has been conducted on generic visualisation techniques such as volume and surface rendering. Interactive computer graphics has also provided basic mechanisms for navigating such data sets with 2D pointing devices. Various examples can be found in the Open Inventor library or in VRML viewers.

With the EchoCom application we have introduced a tool for exploring 3D cardiac ultrasound volumes and for training the handling of an ultrasound transducer. For the tool to be usable as a web-based application, an appropriate mouse interaction technique had to be introduced. A major design goal of the interface was to resemble the handling of a real transducer and to give the user maximum orientation in the complex spatial environment. Common 3D interaction tools such as a virtual trackball or CAD-like interfaces proved counter-intuitive and required considerable learning effort from the user.

Our analysis of traditional training in echocardiography showed that transducer handling is always a two-step process. Depending on the desired view, the transducer is first positioned on one of a few standard points on the patient’s chest. It is then swept, rotated, and tilted until an optimal view is reached. With this in mind, we designed an interface for navigating with a virtual ultrasound transducer as ‘naturally’ as possible.

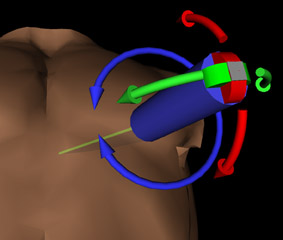

A surface model of the thorax serves as the reference scene. If the user clicks on a spot on the thorax model, the tip of a virtual transducer jumps to that spot and orients itself perpendicular to the thorax. If the user then drags the mouse, the transducer tip follows the mouse pointer. The tip never loses contact with the surface, so no meaningless positions can be reached. Alternatively, the user can set the transducer’s initial position by selecting one of the standard views defined in echocardiography and continue exploring from there. Apart from moving the transducer over the chest, the user can sweep, tilt, and rotate it in a second mode. For that purpose, three intuitive and easily accessible areas on the transducer handle can be ‘grasped’ with the mouse. Each area lets the user perform one of the three basic orientation operations (see figure).

The virtual transducer is positioned on the thorax model and can be swept, tilted, and rotated by dragging the three touch-sensitive areas (blue, red, and green).
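The surface constraint described above can be illustrated with a minimal Python sketch. It is not the EchoCom implementation: the thorax is replaced by a hypothetical ellipsoid (`SEMI_AXES`), and the names `snap_to_surface`, `place_transducer` and `drag_transducer` are invented for this illustration. The sketch also re-aligns the transducer with the surface normal after every drag step, which is a simplifying assumption.

```python
import math
from dataclasses import dataclass

Vec = tuple[float, float, float]

# Hypothetical stand-in for the thorax surface model: an axis-aligned
# ellipsoid centred at the origin with these semi-axes (arbitrary units).
SEMI_AXES: Vec = (15.0, 10.0, 20.0)


def normalize(v: Vec) -> Vec:
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)


def snap_to_surface(p: Vec) -> Vec:
    """Project p radially onto the ellipsoid (the constraint step)."""
    a, b, c = SEMI_AXES
    s = math.sqrt((p[0] / a) ** 2 + (p[1] / b) ** 2 + (p[2] / c) ** 2)
    if s == 0.0:
        raise ValueError("cannot project the ellipsoid centre")
    return (p[0] / s, p[1] / s, p[2] / s)


def surface_normal(p: Vec) -> Vec:
    """Outward unit normal: gradient of the implicit ellipsoid function."""
    a, b, c = SEMI_AXES
    return normalize((p[0] / a**2, p[1] / b**2, p[2] / c**2))


@dataclass
class TransducerPose:
    tip: Vec   # always lies on the surface
    axis: Vec  # unit vector pointing from the tip into the body


def place_transducer(picked: Vec) -> TransducerPose:
    """Click: jump to the picked spot, perpendicular to the surface."""
    tip = snap_to_surface(picked)
    n = surface_normal(tip)
    return TransducerPose(tip=tip, axis=(-n[0], -n[1], -n[2]))


def drag_transducer(pose: TransducerPose, delta: Vec) -> TransducerPose:
    """Drag: move freely, then snap back so the tip never leaves the surface."""
    moved = (pose.tip[0] + delta[0], pose.tip[1] + delta[1], pose.tip[2] + delta[2])
    return place_transducer(moved)
```

Because every pose passes through `snap_to_surface`, positions off the surface cannot be represented at all, which is the point of the constraint. A real surface model would replace the ellipsoid with a ray-mesh intersection or a nearest-point query.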

Initial informal evaluations with both novice and trained cardiologists, some familiar and some unfamiliar with 3D interfaces, have shown that such a specialised, constrained interface is easy to use, intuitive, and suitable for the task.

In the TriTex project another application is about to be developed as a visualisation tool for 3D medical and geological data. These data originate from medical 3D image scanners or from seismic surveys that map the Earth’s subsurface structures. Because of the data’s complexity, manual slice-by-slice analysis is very time-consuming and error-prone. To facilitate manual interpretation, an automated texture analysis component processes the three-dimensional data sets and identifies sub-volumes with homogeneous structural qualities, thus classifying and segmenting the raw data.

The analysis results can be used as an augmentation in a mixed-reality visualisation. However, only with an adequate navigation mechanism will this augmentation guide the user to a more efficient exploration. The key concept is the relevance of certain border surfaces and of the volumetric structures they enclose or adjoin. Navigation can therefore concentrate on letting the user pick bodies and surfaces of interest and then, taking the picked object as a reference structure, explore its immediate vicinity.

With a planar border surface as the reference structure, for example, the view can be set to a perpendicular cross-section and navigation can be constrained to translations along this surface. Certain spherical structures can be explored through diametric cross-sections, which reduces navigation to the rotational parameter alone.
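Both constraints can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the TriTex implementation: the function names are hypothetical, and for the spherical case the sketch assumes that the cutting plane rotates about one fixed diameter (here the z-axis), so that a single angle describes the view.

```python
import math

Vec = tuple[float, float, float]


def dot(u: Vec, v: Vec) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def normalize(v: Vec) -> Vec:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def constrain_to_plane(motion: Vec, plane_normal: Vec) -> Vec:
    """Planar reference surface: keep only the part of a requested
    movement that runs along the surface (drop the normal component)."""
    n = normalize(plane_normal)
    k = dot(motion, n)
    return (motion[0] - k * n[0], motion[1] - k * n[1], motion[2] - k * n[2])


def diametric_section(centre: Vec, angle: float) -> tuple[Vec, Vec]:
    """Spherical reference structure: return (point, normal) of a cutting
    plane through the centre, rotated by `angle` about the z-axis.
    The plane always contains the centre, so it stays diametric."""
    normal = (math.cos(angle), math.sin(angle), 0.0)
    return centre, normal
```

In the planar case three translational degrees of freedom shrink to two; in the spherical case, under the fixed-axis assumption, the whole view is driven by one angle, which could be mapped directly to a horizontal mouse drag.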

Both examples share a common design principle: efficient navigation in complex environments, such as three-dimensional image data, requires visualisation and interaction techniques that are derived from, and suited to, the tasks. Generic navigation techniques must be constrained to reduce complexity both in interpreting the data and in interacting with it. Abstract reference structures (standardised, registered models or objects automatically extracted from the raw data) provide spatial constraints, while the remaining degrees of navigational freedom are determined by the need to visualise neighbouring visual content simultaneously.

Constraint-based navigation has proved to be a successful tool for different applications designed to address highly specialised needs. However, developing an adequate constraint-based navigation scheme always requires an in-depth field analysis. One result of this analysis is an exact understanding of how information has to be visualised and how views can be manipulated reproducibly.

Link:

GMD Institute for Applied Information Technology: http://fit.gmd.de

Please contact:

Jürgen Marock — GMD

Tel: +49 2241 14-2155

E-mail: juergen.marock@gmd.de