|

|

ERCIM News No.46, July 2001 [contents]

|

by Atid Shamaie, Wu Hai and Alistair Sutherland

The goal of this project in the School of Computer Applications at Dublin City University is to develop a system to recognize hand gestures from real-time video images, even in the case of occlusion. Our system uses a multi-scale approach, Principal Component Analysis (PCA) and graph matching.

The main applications of gesture recognition are communicative (eg Sign Language recognition) and manipulative (eg controlling robots without any physical contact between human and computer). Irish Sign Language (ISL) is the native language of the Irish deaf community and contains thousands of gestures. Each gesture consists of a specific hand-shape and a specific hand-motion (see Figure 1).

Hand-shape recognition

First we gather a data set of all the hand-shapes we wish to recognise. A naive approach to recognizing a new image D would be to simply compare it with all the images stored in the data set and find the target image T with the closest match. But because there are so many images in the data set this will take far too long. We can reduce the time by using a multi-scale approach. We divide up the data set into groups of images, which are similar to one another by blurring the images at different levels so that small differences between similar images will be eroded. Thus a whole group of original images may become reduced to just one image, which represents the entire group (see Figure 2).

|

|

| Figure 1: The hand on the left is ‘a’ in ISL. The right one is ‘z’, which includes movement. | Figure 2: Blurring the image at different levels. |

As a first step, we need to search only those images, which remain distinguishable after blurring and find the target image T1 which matches D best. As a second step, we reduce the blurring slightly and then search only those images in the group represented by T1. We find the image T2 which matches D best. We then reduce the blurring still further and search only those images represented by T2. We find the next target T3, and so on, until the blurring has been reduced to zero. Suppose we can search 10 images at each step. It takes only 4 steps to find the best match out of 10000 images.

Hand-motion recognition

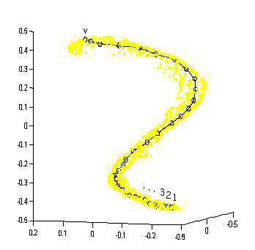

PCA is a technique which allows us to represent images as points in a low-dimensional space. If each image is composed of 32x32 pixels whose values vary from 0 to 255, then each image defines a point in 1024 dimensional space. If we grab a sequence of images representing a gesture then this sequence will generate a sequence of points in space. However, this set of points will usually lie on a low-dimensional sub-space within the global 1024D space. The PCA algorithm allows us to find this sub-space, which usually consists of up to three dimensions. This allows us to visualise the sequence of points representing the gesture (Figure 3). We can represent this sequence of points by a graph. We can find a different sub-space and graph for each gesture in the vocabulary of our system.

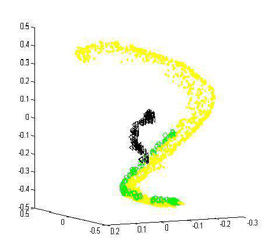

In the recognition phase we capture an image sequence of a new gesture and try to classify it as one of the known gestures in the systemís vocabulary. We project the new gesture into each of the sub-spaces and compare the graph of the new gesture to the graphs of the known gestures. We have developed a graph-matching algorithm that finds the best match based on finding the shortest edges of a complete bipartite graph (see Figure 4).

|

|

| Figure 3: A graph representing a gesture in a subspace. | Figure 4: Projection of two different new gestures into a subspace. The green graph matches the known graph (yellow) but the black one is not. |

One of the advantages of this algorithm is its ability to recognise partially-occluded hand gestures. Even if there is a gap in the new gesture sequence due to temporary occlusion it is still possible to compute a match. In addition, it is possible to identify the start and end points of the new gesture. It is even possible to use this algorithm when the gesture is made in reverse temporal order, since the graphs are the same in each case.

Future Work

Since speed is crucial in this application we may have to parallelise our algorithm if the number of gestures gets large. This algorithm is inherently parallel at different levels. Not only projecting the unknown input gesture into n subspaces and forming n graphs could be distributed to several processing units but the graph matching algorithm could also be run on several processing elements to reduce the running time.

The phenomenon of ‘co-articulation’, in which one gesture influences the next in a temporal sequence, is very important in fluent Sign language. Recognition of co-articulated gestures is one of the hardest parts of gesture recognition. We believe our graph-matching algorithm can be adapted quite naturally to cope with this problem. Finally detection of several gestures connected sequentially in a sentence and understanding of the whole sentence is ultimate goal of this research.

Link:

http://www.compapp.dcu.ie/~ashamaie/mvg

Please contact:

Alistair Sutherland — Dublin City University, Ireland

Tel: +353(1) 7005511

E-mail: alistair@compapp.dcu.ie