ERCIM News No.44 - January 2001 [contents]


by Lakshmi Sastry, Gavin Doherty and Michael Wilson

The two major directions for interactive computer graphics research at Rutherford Appleton Laboratory are, first, interaction with the graphical representation (how users can manipulate graphical representations in real time to gain insight, learn, reach decisions or enjoy a game) and, second, establishing a development methodology for computer graphics, as one would for any other piece of software engineering, that takes account of efficiency and quality measures.

Earlier research tried to computerise what was very difficult for humans and leave the rest to people, since they were more plentiful and cheaper. Today, application designers take the reverse view: once the data is in the machine, leave the whole process to the application except where a human is absolutely required (for example, for legal authorisation), since machines are cheap and humans are expensive. This has a dramatic effect on the interaction between the user and the system. Where interaction used to give the user as much control over, and awareness of, the process as possible, it is now required only when there is something the machine cannot do. At that point the machine must present its current state to the user clearly enough for them to understand it and take on their role.

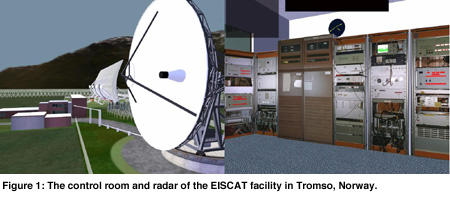

In applications such as simulations for training, we need to provide an environment that mimics reality closely enough to allow learning in the simulation and to promote transfer of that learning to the real environment. In an example such as the EISCAT radar control room (see figure 1), we need to simulate the appearance of the radar, the building and the control machines to provide visual cues, as well as the behaviour of the devices used to interact with the radar and the feedback they give. When users push switches or pull levers, they need to learn not only the sequence of abstract operations and their consequences for the radar and control system, but also how to move their hands and bodies so that the physical control sequences they learn can be transferred to the real control room. They also need feedback on their actions through sight, sound and, eventually, touch to reinforce this experience.
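The abstract side of such a training simulation, checking that a trainee performs operations in the right order and giving immediate feedback, can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not the system described here: the class `ProcedureTrainer` and the step names (`power_on`, `select_mode`, `start_transmit`) are hypothetical placeholders rather than the real EISCAT procedure, and the sketch ignores the physical movement and multi-sensory feedback the paragraph above stresses.

```python
from dataclasses import dataclass, field


@dataclass
class ProcedureTrainer:
    """Checks a trainee's control actions against an expected sequence.

    Hypothetical sketch: the step names passed in are placeholders, not the
    actual EISCAT control-room procedure.
    """
    expected: list[str]
    position: int = 0                      # index of the next expected step
    log: list[str] = field(default_factory=list)

    def act(self, action: str) -> str:
        """Record one switch/lever action and return textual feedback."""
        if self.position >= len(self.expected):
            feedback = f"'{action}': procedure already complete"
        elif action == self.expected[self.position]:
            self.position += 1             # advance only on a correct step
            feedback = f"'{action}': correct"
        else:
            feedback = (f"'{action}': unexpected, next step is "
                        f"'{self.expected[self.position]}'")
        self.log.append(feedback)
        return feedback

    @property
    def complete(self) -> bool:
        return self.position == len(self.expected)


if __name__ == "__main__":
    trainer = ProcedureTrainer(["power_on", "select_mode", "start_transmit"])
    for step in ["power_on", "start_transmit", "select_mode", "start_transmit"]:
        print(trainer.act(step))
    print("complete:", trainer.complete)
```

An incorrect action does not advance the procedure, so the trainee is told which step is expected next; in a full simulator this textual feedback would be replaced by visual, auditory and eventually haptic cues.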

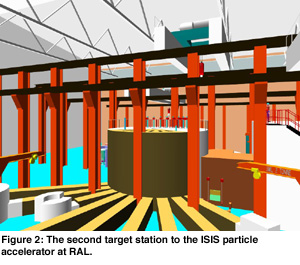

Another class of application that we develop to support scientists is building and experiment prototyping and design review. Here, a new experiment, such as a particle accelerator target station, may be under design, with the building, the main accelerator, the target and each of 20 experiments designed by different teams in universities around Europe. We can collect and integrate their designs into a single model, but we then need to help the designers see where conflicts arise, for example where the particle beam passes through a support pillar in the building, which is clearly impossible in the real world. To do this, we use the graphics to support group exploration of the overall design and decision making among the designers. We currently do this in a 20-seat auditorium that provides 3D stereo presentation for the whole audience, while one user controls navigation and interaction with the model.
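The article describes conflicts being found by designers exploring the integrated model together; it does not say how candidate conflicts are located. One common way to flag obvious clashes before such a review is a geometric intersection test. The sketch below assumes, purely for illustration, that a beam line is a straight segment and a pillar is an axis-aligned box, and uses the standard slab method; the function names and the example geometry are hypothetical.

```python
Vec3 = tuple[float, float, float]


def segment_hits_box(p0: Vec3, p1: Vec3, box_min: Vec3, box_max: Vec3) -> bool:
    """Slab test: does the segment p0 -> p1 pass through the axis-aligned box?"""
    t_enter, t_exit = 0.0, 1.0  # parametric range of the segment still inside all slabs
    for a in range(3):
        d = p1[a] - p0[a]
        if d == 0.0:
            # Segment is parallel to this slab: it must already lie within it.
            if p0[a] < box_min[a] or p0[a] > box_max[a]:
                return False
            continue
        t0 = (box_min[a] - p0[a]) / d
        t1 = (box_max[a] - p0[a]) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter = max(t_enter, t0)
        t_exit = min(t_exit, t1)
        if t_enter > t_exit:
            return False  # the slab intervals do not overlap on the segment
    return True


def find_clashes(beams: dict[str, tuple[Vec3, Vec3]],
                 pillars: dict[str, tuple[Vec3, Vec3]]) -> list[tuple[str, str]]:
    """Return (beam, pillar) name pairs whose geometry intersects."""
    clashes = []
    for beam_name, (start, end) in beams.items():
        for pillar_name, (lo, hi) in pillars.items():
            if segment_hits_box(start, end, lo, hi):
                clashes.append((beam_name, pillar_name))
    return clashes


if __name__ == "__main__":
    # Hypothetical geometry (metres): one beam along x, two pillars.
    beams = {"beamline_1": ((0.0, 0.0, 1.0), (50.0, 0.0, 1.0))}
    pillars = {
        "pillar_A": ((10.0, -0.5, 0.0), (11.0, 0.5, 5.0)),  # straddles the beam
        "pillar_B": ((20.0, 2.0, 0.0), (21.0, 3.0, 5.0)),   # off to one side
    }
    print(find_clashes(beams, pillars))
```

Running the example reports only `("beamline_1", "pillar_A")`. An automatic check like this could only propose candidate clashes; deciding how to resolve them remains the group decision-making task the auditorium sessions support.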

Both these examples require a clear specification of the geometry, appearance, behaviour and interaction the graphics must provide to achieve the task objectives, and a methodology for implementing them and evaluating whether the requirements have been met. In one current project with the universities of York and Bath, previously reported in ERCIM News (April 2000, page 49), we have started to develop such a methodology. A second project, with the universities of Grenoble, Parma, Sheffield and Bath, DFKI in Germany, FORTH in Greece and CNR in Italy, is developing a reference model for characterising continuous interaction techniques (see ERCIM News, January 2000, page 21). Despite this activity, a great deal of further work is required to map high-level requirements, such as those for learning or group decision making, down to implementable and evaluable geometry, appearance, behaviours, interactions and feedback for sight, sound and touch. These must exploit the skills that humans have and machines lack, and will continue to lack even as Moore's law increases computing power.

Links:

W3C Scalable Vector Graphics (SVG): http://www.w3.org/Graphics/SVG/Overview.htm8

ISIS second target station: http://www.isis.rl.ac.uk/targetstation2/

EISCAT radar facility: http://www.eiscat.no/eiscat.html

INQUISITIVE project: http://www.cs.york.ac.uk/hci/inquisitive/

TACIT Project: http://kazan.cnuce.cnr.it/TACIT/TACIThome.html

Please contact:

Michael Wilson - CLRC

Tel: +44 1235 44 6619

E-mail: m.d.wilson@rl.ac.uk