ERCIM News No.44 - January 2001 [contents]

by Wolfgang Broll

While people are used to interacting in their natural three-dimensional environment, they often find it difficult to work efficiently with 3D applications. This applies especially to unskilled or inexperienced users. The Virtual Round Table is a novel collaboration environment that enhances the user’s workspace with virtual 3D objects. It provides a basis for natural 3D interaction by using real-world items as tangible interfaces for virtual-world objects. Intuitive collaboration between multiple participants is thus supported without restricting existing communication skills.

In order to give a large number of people access to 3D technology to improve their daily working environment, interacting in a 3D environment should be as intuitive as interacting in the real world. The Virtual Round Table aims to realise an environment that provides the basis for intuitive collaboration. It extends the users' physical environment with artificial 3D objects. This approach allows us to represent even complex dependencies within the common working environment, providing the basis for a collaborative planning and presentation environment. The Virtual Round Table realises this environment using a location-independent and inexpensive approach.

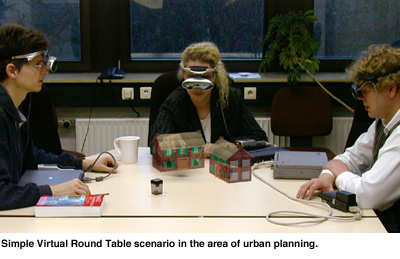

The Virtual Round Table allows all participants of a work group to stay and act in their natural environment. In addition, familiar verbal communication and interaction are not restricted by the system. Intuitive interaction is central to the user's acceptance of the system. Combining real and virtual objects into a conceptual unit enables users to intuitively grasp and rearrange virtual objects. The Virtual Round Table thus emphasizes common collaboration and cooperation mechanisms within regular meeting situations and extends them with arbitrary virtual scenarios (see Figure).

Visualization

The visualization of a synthetic scene within a real-world working area is realised by using see-through projection glasses. Each user thus receives an individual visual impression of the work space that includes both physical and virtual objects. The individually adapted stereoscopic view for each user is rendered by the multi-user virtual reality toolkit SmallTool. In order to provide a seamless integration of the real and virtual world, the virtual view of each user has to be rendered in real time according to his or her current real-world location and viewing direction. This requires continuous tracking of the user's head position and orientation. Due to the high sensitivity of the human visual system, the device's position and orientation detection mechanisms have to be highly accurate. Moreover, the device should be insensitive to external interference.
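The dependency between the tracked head pose and the per-user stereoscopic view can be illustrated with a small sketch. It is not SmallTool code: the function names, the yaw/pitch parameterisation, the right-handed Y-up coordinate system and the 64 mm eye separation are all assumptions made for illustration. The idea is simply that each eye is offset from the tracked head position along the head's local right axis, and that the view matrix is the inverse of the resulting eye pose.

```python
import math

def rotation_from_yaw_pitch(yaw, pitch):
    """3x3 rotation R = Ry(yaw) * Rx(pitch), angles in radians (assumed convention)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    return [
        [cy, sy * sp, sy * cp],
        [0.0, cp, -sp],
        [-sy, cy * sp, cy * cp],
    ]

def view_matrix(rotation, eye):
    """4x4 view matrix: the inverse of the rigid transform [R | eye], i.e. [R^T | -R^T eye]."""
    rt = [[rotation[c][r] for c in range(3)] for r in range(3)]
    t = [-sum(rt[r][c] * eye[c] for c in range(3)) for r in range(3)]
    return [rt[0] + [t[0]], rt[1] + [t[1]], rt[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]

def stereo_views(head_pos, yaw, pitch, ipd=0.064):
    """Return (left_view, right_view) for a tracked head pose.

    ipd is the assumed distance between the eyes in metres (hypothetical default).
    """
    rot = rotation_from_yaw_pitch(yaw, pitch)
    right_axis = [rot[r][0] for r in range(3)]  # head's local X axis in world space
    half = ipd / 2.0
    left_eye = [head_pos[i] - half * right_axis[i] for i in range(3)]
    right_eye = [head_pos[i] + half * right_axis[i] for i in range(3)]
    return view_matrix(rot, left_eye), view_matrix(rot, right_eye)

# Example: a user standing at the table, head 1.7 m above the floor, looking straight ahead.
left_view, right_view = stereo_views((0.0, 1.7, 0.0), yaw=0.0, pitch=0.0)
```

Because both matrices are recomputed from every new tracker sample, any error or delay in the tracked pose shows up directly as a misalignment between virtual and real objects, which is why tracking accuracy matters so much here.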

Our current prototype is realised on PCs with high-end stereo-capable graphics accelerators. User head tracking is provided by an InterSense Mark 2 tracking system. However, this tracking device does not fulfil the requirements of a lightweight and non-intrusive system. A sourceless and wireless six-degree-of-freedom inertial tracking device based on the existing MOVY prototype is therefore being developed.

Tangible Interfaces

In our approach we use real-world items as natural tangible interfaces for 3D interaction. By associating manipulable virtual objects with arbitrary physical items, we create an intuitive interaction mechanism. This mechanism allows users to interact with virtual 3D objects in much the same way as with real-world items. It is realised by directly mapping each item's position and orientation to the corresponding properties of the associated virtual object. The superimposition and synchronization of the real items with virtual objects fuses the artificial object and its physical reference into a symbiotic unit. These interaction units allow users to interact with virtual objects directly and naturally.
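A minimal sketch of such an interaction unit, assuming a tracker that reports one pose per recognised item in each frame, might look as follows. The class and function names (`Pose`, `VirtualObject`, `InteractionUnit`, `apply_tracking`) and the quaternion representation are hypothetical and are not taken from the actual system.

```python
from dataclasses import dataclass, field

@dataclass
class Pose:
    position: tuple = (0.0, 0.0, 0.0)
    orientation: tuple = (1.0, 0.0, 0.0, 0.0)  # unit quaternion (w, x, y, z), assumed

@dataclass
class VirtualObject:
    name: str
    pose: Pose = field(default_factory=Pose)

class InteractionUnit:
    """Couples one physical item with one virtual object."""

    def __init__(self, item_id, virtual_object):
        self.item_id = item_id
        self.virtual_object = virtual_object

    def update(self, tracked_pose):
        # Direct mapping: the virtual object takes over the item's pose unchanged.
        self.virtual_object.pose = Pose(tracked_pose.position, tracked_pose.orientation)

def apply_tracking(units, tracked_poses):
    """Update all units from a dict item_id -> Pose.

    Items that were not seen in this frame keep their last known pose.
    """
    for unit in units:
        pose = tracked_poses.get(unit.item_id)
        if pose is not None:
            unit.update(pose)

# Example: a cup on the table stands in for a virtual stage in an event plan.
stage = VirtualObject("stage")
units = [InteractionUnit("cup", stage)]
apply_tracking(units, {"cup": Pose((0.10, 0.00, 0.30))})
```

Keeping the last known pose when an item is temporarily not detected is a design choice of this sketch, not something the article specifies. In every case the virtual object only follows the pose of the physical item that the users move on the table.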

Such interaction units require an additional mechanism to recognise and track the position and orientation of these real-world items. Since we do not want to use designated representatives based on markers or attached sensors, we use a computer-vision-based approach. Real-world items are tracked by analysing video images from head-mounted or fixed cameras. Our first approach is based on simple pattern and luminance detection. Future developments, however, will use feature vectors for object recognition and localisation.
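The article does not describe the detection method in detail. As one plausible reading of simple luminance detection, the following sketch thresholds the luminance of each pixel in a video frame and returns the centroid of the bright region as the item's image position. The frame format (rows of RGB tuples), the threshold value and the function names are assumptions; the luma weights are the standard Rec. 601 coefficients.

```python
def luminance(rgb):
    """Approximate luminance of an (r, g, b) pixel using Rec. 601 luma weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b

def bright_centroid(image, threshold=200.0):
    """Locate a bright item in a frame.

    image: list of rows, each a list of (r, g, b) tuples with values 0..255.
    Returns the (x, y) centroid of all pixels at or above the threshold,
    or None if no pixel is bright enough.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if luminance(pixel) >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)

# Example: a dark 4x4 frame containing a bright 2x2 patch.
black, white = (0, 0, 0), (255, 255, 255)
frame = [[black] * 4 for _ in range(4)]
for y in (1, 2):
    for x in (1, 2):
        frame[y][x] = white
position = bright_centroid(frame)
```

A centroid only yields a 2D image position. Recovering the item's orientation and its 3D location on the table would need further steps, such as pattern matching and camera calibration, which are outside the scope of this sketch.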

Application Areas

We are currently evaluating the Virtual Round Table environment in the area of collaborative planning applications. This includes the planning of events such as concerts, fairs, theatre plays and product presentations. Other application areas are architectural design, urban planning, disaster management (e.g. coordinating action forces for forest fires) as well as cooperative simulation and learning environments.

Conclusions and Future Work

Natural interaction mechanisms are facilitated by using real items as tangible interfaces to virtual objects, without restricting the users' common interaction and communication mechanisms. In our future work we will elaborate these interaction units and evaluate multi-modal interaction mechanisms. We will extend the inertial tracking device to support six degrees of freedom and enhance the computer-vision-based system.

Links:

Collaborative Virtual and Augmented Environments Group at GMD: http://fit.gmd.de/camelot

Please contact:

Wolfgang Broll - GMD

Tel: +49 2241 14 2715

E-mail: wolfgang.broll@gmd.de