ERCIM News No.43 - October 2000

Searching Documentary Films On-line: the ECHO Project

by Pasquale Savino

Wide access to large information collections is of great potential importance in many aspects of everyday life. So far, however, limitations in information and communication technologies have prevented the average person from taking much advantage of existing resources. Historical documentaries held by national audiovisual archives are among the most precious and least accessible cultural resources. The ECHO project aims to make this material more accessible by developing a Digital Library (DL) service for historical films belonging to large national audiovisual archives.

The ECHO project, funded by the European Community within the Fifth Framework Programme (Key Action III), intends to provide a set of digital library services that will enable a user to search and access documentary film collections. For example, users will be able to investigate how different countries have documented a particular period of their history, or view an event as it was documented in its country of origin and compare this with how the same event was documented in other countries. One effect of the emerging digital library environment is that it frees users and collections from geographic constraints.

The project is co-ordinated by IEI-CNR. It involves a number of European institutions (Istituto Luce, Italy; Institut National de l’Audiovisuel, France; Netherlands Audiovisual Archive; and Memoriav, Switzerland) that hold or manage unique collections of documentary films dating from the beginning of the twentieth century to the 1970s. Tecmath, EIT, and Mediasite are the industrial partners that will develop and implement the ECHO system. There are two main academic partners (IEI-CNR and Carnegie Mellon University, CMU) and four associate partners (CNRS-LIMSI, IRST, University of Twente, and University of Mannheim).

ECHO System Functionality

The emergence of the networked information system environment allows us to envision digital library systems that transcend the limits of individual collections to embrace collections and services that are independent of both location and format. In such an environment, it is important to support the interoperability of distributed, heterogeneous digital collections and services. Achieving interoperability among digital libraries is facilitated by conformance to an open architecture as well as agreement on items such as formats, data types, and metadata conventions.

ECHO aims to develop a long-term, reusable software infrastructure and new metadata models for films in order to support the development of interoperable audiovisual digital libraries. Through new models for film metadata, intelligent content-based searching and film-sequence retrieval, video abstracting tools, and appropriate user interfaces, the project intends to improve the accessibility, searchability, and usability of large distributed audiovisual collections. Through multilingual services and cross-language retrieval tools, it intends to support users in accessing material across linguistic, cultural and national boundaries. The ECHO system will first be tested on four national documentary film archives (Dutch, French, Italian, Swiss). Other archives may be added at a later stage.

The ECHO System

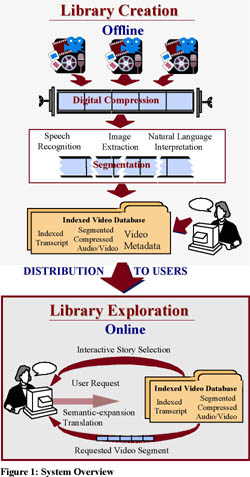

The figure provides an overview of the main operations supported by the ECHO system. ECHO assists the population of the digital library through the use of mechanisms for the automatic extraction of content. Using a high-quality speech recogniser, the sound track of each video source is converted to a textual transcript, with varying word error rates. A language understanding system then analyses and organises the transcript and stores it in a full-text information retrieval system. Multiple speech recognition modules for different European languages will be included. Likewise, image understanding techniques are used for segmenting video sequences by automatically locating boundaries of shots, scenes, and conversations. Metadata is then associated with film documentaries in order to complete their classification.
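
The article does not say which image understanding techniques ECHO uses to locate shot boundaries. As a rough illustration of the general idea only, the sketch below flags a new shot whenever the grey-level histogram of a frame differs sharply from that of the previous frame. The frame representation, the function names, and the threshold value are assumptions made for this example and are not taken from the ECHO system.

```python
from typing import List, Sequence

# Assumption: a frame is a flat, non-empty sequence of grey levels in 0..255.
Frame = Sequence[int]


def histogram(frame: Frame, bins: int = 8) -> List[float]:
    """Return a normalised grey-level histogram with equal-width bins."""
    counts = [0] * bins
    for pixel in frame:
        counts[min(pixel * bins // 256, bins - 1)] += 1
    total = len(frame)
    return [count / total for count in counts]


def shot_boundaries(frames: Sequence[Frame], threshold: float = 0.5) -> List[int]:
    """Return the indices of frames that appear to start a new shot."""
    if not frames:
        return []
    boundaries = []
    previous = histogram(frames[0])
    for i in range(1, len(frames)):
        current = histogram(frames[i])
        # Half the L1 distance between two normalised histograms lies in [0, 1].
        distance = 0.5 * sum(abs(a - b) for a, b in zip(previous, current))
        if distance > threshold:
            boundaries.append(i)
        previous = current
    return boundaries


# Synthetic frames: three dark, two bright, one dark.
dark = [10] * 16
bright = [240] * 16
frames = [dark, dark, dark, bright, bright, dark]
print(shot_boundaries(frames))  # → [3, 5]
```

A fixed threshold of this kind only catches hard cuts; gradual transitions such as fades and dissolves, and the grouping of shots into scenes and conversations, need more elaborate analysis.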

Search and retrieval via desktop computers and wide area networks is performed by expressing queries on the audio transcript, on metadata, or by image similarity. Retrieved documentaries, or their abstracts, are then presented to the user. By combining image, speech and natural language understanding technology, the system compensates for problems of interpretation and search in error-prone and ambiguous data sets.
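
To make the combination of transcript and metadata queries more concrete, here is a minimal sketch of an inverted index over time-stamped transcript segments, with an optional metadata filter. It is not the ECHO retrieval engine: the `Segment` fields, the `TranscriptIndex` class, and the sample records are all invented for illustration, and image similarity retrieval is left out.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class Segment:
    film_id: str          # hypothetical identifier
    start_seconds: float  # where the segment begins in the film
    transcript: str       # recogniser output, possibly containing errors
    country: str          # illustrative metadata field


def tokenize(text: str) -> List[str]:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


class TranscriptIndex:
    def __init__(self) -> None:
        self.segments: List[Segment] = []
        self.postings: Dict[str, Set[int]] = defaultdict(set)

    def add(self, segment: Segment) -> None:
        position = len(self.segments)
        self.segments.append(segment)
        for term in tokenize(segment.transcript):
            self.postings[term].add(position)

    def search(self, query: str, country: Optional[str] = None) -> List[Segment]:
        """Return segments containing every query term, optionally filtered by country."""
        terms = tokenize(query)
        if not terms:
            return []
        hits = set.intersection(*(self.postings.get(t, set()) for t in terms))
        results = [self.segments[i] for i in sorted(hits)]
        if country is not None:
            results = [s for s in results if s.country == country]
        return results


# Made-up sample data.
index = TranscriptIndex()
index.add(Segment("film-a", 0.0, "The liberation of Rome in 1944", "IT"))
index.add(Segment("film-b", 12.5, "Paris celebrates the liberation", "FR"))
index.add(Segment("film-c", 30.0, "A football match in Rome", "IT"))

print([s.film_id for s in index.search("liberation")])                # → ['film-a', 'film-b']
print([s.film_id for s in index.search("liberation", country="FR")])  # → ['film-b']
```

Exact term matching of this kind is brittle when the transcript contains recognition errors, which is one reason for combining several sources of evidence as described above.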

Expected Results

The project will follow an incremental approach to system development. Three prototypes will be developed, each offering more functions than the last. The starting point will be a software infrastructure resulting from the integration of the Informedia and Media Archive® technologies.

The first prototype (Multiple Language Access to Digital Film Collections) will integrate the speech recognition engines with the infrastructure for content-based indexing. It will demonstrate an open system architecture for digital film libraries with automatically indexed film collections and intelligent access to them on a national language basis.

The second prototype (Multilingual Access to Digital Film Collections) will add a metadata editor, which will be used to index the film collections according to a common metadata model. Index terms extracted automatically during the indexing and segmentation of the film material (first prototype) will be integrated with local metadata, extracted manually, in a common description defined by the common metadata model. The second prototype will support the interoperability of the four collections as well as content-based searching and retrieval.
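
The article does not define the common metadata model. The sketch below only illustrates the integration step in principle: manually entered local metadata is mapped onto a shared record and merged with automatically extracted index terms. The `CommonRecord` fields, the local field names, and the mapping table are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CommonRecord:
    """Hypothetical common description shared by all archives."""
    film_id: str
    title: str
    language: str
    keywords: List[str] = field(default_factory=list)


def to_common_record(
    film_id: str,
    local_metadata: Dict[str, str],
    field_map: Dict[str, str],
    automatic_terms: List[str],
) -> CommonRecord:
    """Map local field names to common ones and merge in automatic index terms."""
    common = {field_map[k]: v for k, v in local_metadata.items() if k in field_map}
    manual = [k.strip() for k in common.get("keywords", "").split(",") if k.strip()]
    # Manual keywords come first; duplicates are dropped case-insensitively.
    seen = set()
    keywords = []
    for term in manual + automatic_terms:
        key = term.lower()
        if key not in seen:
            seen.add(key)
            keywords.append(term)
    return CommonRecord(
        film_id=film_id,
        title=common.get("title", ""),
        language=common.get("language", ""),
        keywords=keywords,
    )


# Made-up local record with archive-specific (here Italian) field names.
local = {"titolo": "Newsreel 12", "lingua": "it", "parole_chiave": "Roma, sport"}
field_map = {"titolo": "title", "lingua": "language", "parole_chiave": "keywords"}
record = to_common_record("film-c", local, field_map, ["roma", "calcio"])
print(record.keywords)  # → ['Roma', 'sport', 'calcio']
```

Keeping the field mapping as a per-archive table means each collection can retain its local schema while still exposing the same common description to the search services.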

The third prototype (ECHO Digital Film Library) will add summarization, authentication, privacy and charging functionalities in order to provide the system with full capabilities.

Links:

http://www.iei.pi.cnr.it/echo/

Please contact:

Pasquale Savino - IEI-CNR

Tel: +39 050 315 2898

E-mail: P.Savino@iei.pi.cnr.it
