by Amedeo Cesta, Enrico Giunchiglia, Paola Riccucci, Paolo Traverso

JERRY is a modular system for the interactive design, planning, control and supervision of the operation of autonomous robot systems in space. In such a highly critical environment, JERRY can effectively support the robot operators in both ordinary and emergency situations and make their work easier, safer and faster. JERRY can also provide scientists with no specific competence in robotics with a higher-level support for the automated execution of complex robot activities, with limited contributions from specialized operators.

JERRY has been developed in a collaboration between the Mechanized Reasoning Groups at both the University of Genoa and the Institute for Scientific and Technological Research (IRST), Trento, together with the Institute of Psychology, CNR, Rome, as part of an ongoing and more ambitious project funded by ASI, the Italian Space Agency. In this application, JERRY provides its functionality to different kinds of users who have to design, control and monitor a robot arm performing complex tasks, such as the setting up of experiments in a space workcell.

The system integrates different Artificial Intelligence techniques into an interactive environment to synthesize plans for the robot to execute. The high level goal of the system is to simplify the interaction of users at various levels of expertise with a rather complex robotic device.

JERRY has been designed to enable robot operation in Interactive Autonomy, ie the system can perform all of its tasks autonomously (including recovery from various non-nominal situations), but the user is able to easily monitor and possibly override autonomous operations, in a collaborative fashion. Effectiveness is guaranteed by a set of tightly integrated specialized modules, each dedicated to a specific task. Interactive autonomy is attained through a user-centered architecture, where the user asks for services from the specialized modules.

The key AI feature of the project lies in the definition of an experiment as a planning problem, which is then processed by the Planning and Execution modules.

The Planning Module requests a high-level description of the task to be performed by the robot and performs the synthesis of an equivalent abstract plan (in a user-oriented symbolic language), ie a sequence of high-level actions for the robotic system to execute.

The Execution Module transforms an abstract plan (describing the given task as a sequence of high-level actions) into an executable plan, where high-level actions are expressed in terms of the basic actions the robot system can perform, taking into account constraints related to the geometry and physics of the robot system and its operating domain. The executable plan is then encoded into the robot’s control language, thus generating an executable code in system-specific language. The resulting code can be visually validated by submitting it to the Software Simulator of the robotic system.

Prototype

At present, a complete prototype has been developed that considers the SPIDER arm developed by ASI as the target robotic device. The system is able to synthesize low level programs coded in PDL2, the SPIDER command language.

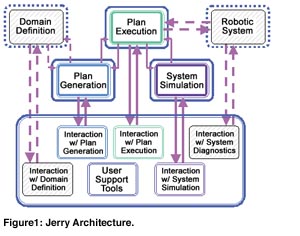

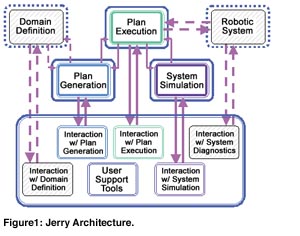

From a software perspective, JERRY features a client/server architecture as shown in Figure 1. Each specialized module (top) is made accessible remotely (via TCP-IP) as a server. The User Interaction Module (bottom) acts as a client, connecting to the appropriate server at each step of the experiment’s lifecycle and requesting its processing services.

Such an architecture results in a rather ‘lightweight’ Interaction Module, thus enabling users to run the earlier stages of development on computers of limited resources, such as laptops; for a wider portability, the module is written in Java.

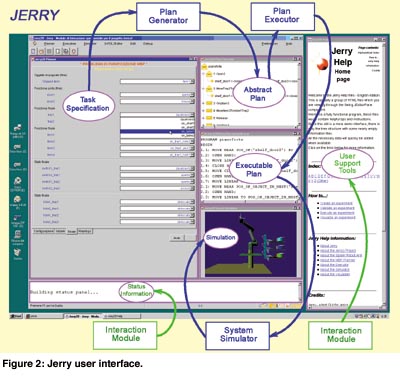

Figure 2 shows JERRY as seen by the user, through its Interaction Module. In the main window (left), four viewports show the resources of the specialized servers: the planning problem specification window (left); the plan in execution (top-right); the PDL2 code corresponding to the action being executed (middle-right); and the visual simulation of the plan coming from the simulator (bottom-right). At any moment, for better readability, users can move the module on which their attention is focused to the main viewport. At the bottom of the main window, a Console Area shows information on the status of communications with the remote servers and the data exchanged.

The HTML User Guide (right) appears in a separate window. It can be also viewed independently of the User Interaction Module, through any HTML browser.

Future Developments

Although the project is still running, a first working prototype is now available for experimentation. The current scenario concerns the SPIDER arm extracting a tray from a shelf, fixing it to one of two tables and then automatically performing experiments moving objects contained in the tray.

Further development will enhance the capabilities of the planning problem specification window. While the current design is centered on the selection of text parameters, the next release will enable users to specify all the settings via direct manipulation of graphical objects. Further work will be aimed at making the whole architecture completely domain-independent, in order to obtain a powerful tool for robot software development and verification, starting from a high level specification language.

Links

http://pst.ip.rm.cnr.it/projects/jerry.htm

Please contact:

Amedeo Cesta - IP-CNR

Fax: +39 06 824 737

E-mail: cesta@ip.rm.cnr.it