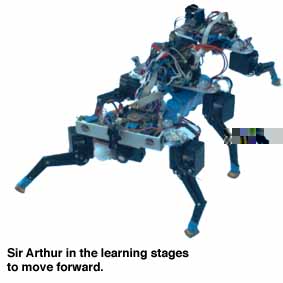

Sir Arthur - Learning to Walk on Six Legs

by Frank Kirchner

The objective of the Sir Arthur project is to demonstrate the application of Reinforcement Learning to a walking machine with many degrees of freedom. First, learning is used at the elementary level for the basic swing and stance motions that a single leg needs in order to walk. Building on this, a more complex, higher-level behaviour is learned: coordinating all the legs to produce overall forward motion. Finally, these behaviours are applied to a goal-oriented task in which Sir Arthur must find areas of maximum light intensity.

Sixteen motors provide the swing and stance movements of each of the six legs and two degrees of freedom for each of the two body joints. These joints allow Sir Arthur to lift its head and look around to reorient itself relative to a light source. Forward movement on six-legged machines is achieved by swinging three legs forward while the other three simultaneously perform a stance movement.
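
The gait described above, with three legs swinging forward while the other three are in stance, is commonly called a tripod gait. The Python sketch below illustrates it under stated assumptions: the leg labels (`L1` to `R3`) and the grouping of the legs into two tripods (front and rear legs of one side plus the middle leg of the other side) follow the usual hexapod convention and are not details given in this article. It also checks that 16 motors are consistent with two motors per leg plus two per body joint, which is our reading of the description above.

```python
from __future__ import annotations

# Hypothetical leg labels: L/R = left/right side, 1/2/3 = front/middle/rear.
LEGS = ("L1", "L2", "L3", "R1", "R2", "R3")

# Assumed tripod grouping (common hexapod convention, not stated in the article).
TRIPOD_A = ("L1", "R2", "L3")
TRIPOD_B = ("R1", "L2", "R3")

# Motor budget as read from the text: swing and stance per leg, 2 DOF per body joint.
MOTORS_PER_LEG = 2
BODY_JOINTS = 2
DOF_PER_JOINT = 2
TOTAL_MOTORS = len(LEGS) * MOTORS_PER_LEG + BODY_JOINTS * DOF_PER_JOINT


def tripod_phase(step: int) -> dict[str, str]:
    """Return 'swing' or 'stance' for every leg at a given gait step.

    On even steps tripod A swings forward while tripod B is in stance;
    on odd steps the roles are swapped.
    """
    swinging = TRIPOD_A if step % 2 == 0 else TRIPOD_B
    return {leg: ("swing" if leg in swinging else "stance") for leg in LEGS}


if __name__ == "__main__":
    print("total motors:", TOTAL_MOTORS)
    for step in range(2):
        print(step, tripod_phase(step))
```

Alternating the two tripods keeps three legs on the ground at every step, which is what gives this gait its static stability.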

Sir Arthur is built in three segments, each carrying two legs. The segments are connected by two joints, each with two actively controllable degrees of freedom. These joints enable Sir Arthur to raise and pivot the front and back segments, thereby allowing three-dimensional movements. Each segment has its own microcontroller to perform the basic control tasks. An additional microcontroller, the master controller, is installed in the middle segment to perform higher-level processing. The master and the slave controllers communicate over a bidirectional 8-bit parallel link: the master sends commands to the slaves to perform elementary swing and stance behaviours, while receiving status information and global sensor data.
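
The article does not describe the message format used on the 8-bit link. Purely as an illustration of how a master could address elementary behaviours on a slave, the sketch below packs one command into a single byte. The bit layout, the opcode values and the names `Command`, `encode` and `decode` are all hypothetical.

```python
from __future__ import annotations

from enum import IntEnum


class Command(IntEnum):
    """Hypothetical opcodes for the elementary behaviours named in the article."""
    SWING = 0b01
    STANCE = 0b10


def encode(leg: int, command: Command, param: int) -> int:
    """Pack one master-to-slave command into a byte (hypothetical layout).

    bit 7     : which leg of the segment (0 = left, 1 = right)
    bits 6..5 : behaviour opcode
    bits 4..0 : 5-bit parameter, e.g. a step amplitude (0..31)
    """
    if leg not in (0, 1):
        raise ValueError("leg must be 0 or 1")
    if not 0 <= param <= 0b11111:
        raise ValueError("param must fit in 5 bits")
    return (leg << 7) | (int(command) << 5) | param


def decode(byte: int) -> tuple[int, Command, int]:
    """Inverse of encode; raises ValueError for an out-of-range byte or unknown opcode."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("not an 8-bit value")
    # Command(...) raises ValueError for opcodes 0b00 and 0b11, which are unused here.
    return (byte >> 7) & 1, Command((byte >> 5) & 0b11), byte & 0b11111
```

For example, `encode(1, Command.STANCE, 7)` yields 199 (binary `11000111`), and `decode(199)` recovers `(1, Command.STANCE, 7)`. Fitting a whole command into one byte means a single transfer on the parallel link suffices per command in this sketch.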

Reinforcement Learning was used to learn a trajectory in the state space formed by the possible positions of the legs. The reinforcement signal given to the robot is computed from the difference between a reference trajectory and the movement currently being performed. Using this reinforcement, the system learns the correct sequence of actions to swing and stance one leg.
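
The article does not name the specific learning algorithm. The sketch below assumes tabular Q-learning on a toy, discretized version of the problem: the state is the current time index along a hypothetical reference trajectory together with the current leg position, the actions move the leg one position back, hold it, or move it one position forward, and the reinforcement is the negative distance between the reference position and the position actually reached. `REFERENCE`, `N_POS` and all parameter values are illustrative assumptions.

```python
import random

# Hypothetical reference trajectory: discretized leg positions 0..N_POS-1
# over one swing/stance cycle (values chosen only for illustration).
REFERENCE = [0, 1, 2, 3, 4, 4, 3, 2, 1, 0]
N_POS = 5
ACTIONS = (-1, 0, 1)  # move back one position, hold, move forward one position


def move(pos, action):
    """Apply an action, clamping the leg to its mechanical range."""
    return min(max(pos + action, 0), N_POS - 1)


def best_action(values):
    """Index of the highest action value (first one wins on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning; state = (time index, current leg position)."""
    rng = random.Random(seed)
    last_t = len(REFERENCE) - 1
    q = {(t, p): [0.0] * len(ACTIONS) for t in range(len(REFERENCE)) for p in range(N_POS)}
    for _ in range(episodes):
        pos = REFERENCE[0]
        for t in range(last_t):
            state = (t, pos)
            # Epsilon-greedy exploration.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = best_action(q[state])
            pos = move(pos, ACTIONS[a])
            # Reinforcement: negative distance between reference and performed movement.
            reward = -abs(REFERENCE[t + 1] - pos)
            done = t + 1 == last_t
            target = reward if done else reward + gamma * max(q[(t + 1, pos)])
            q[state][a] += alpha * (target - q[state][a])
    return q


def greedy_rollout(q):
    """Follow the learned policy from the start position and record the leg positions."""
    pos, path = REFERENCE[0], [REFERENCE[0]]
    for t in range(len(REFERENCE) - 1):
        pos = move(pos, ACTIONS[best_action(q[(t, pos)])])
        path.append(pos)
    return path


if __name__ == "__main__":
    print(greedy_rollout(train()))
```

After training, `greedy_rollout(train())` should reproduce `REFERENCE`, because following the reference is the only way to avoid a penalty at every step. Since every reward is zero or negative, initializing all action values to zero makes untried actions look attractive, which pushes the agent to try each alternative at least once.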

Learning to coordinate the individual legs to achieve a more complex behaviour such as walking forward is more difficult. Reinforcement Learning was used for this task as well, together with a technique called Experience Replay to speed up learning. Experience Replay stores successful sequences and uses them as a bias during later learning stages.
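
How the stored sequences bias later learning is not specified in the article. One common form of experience replay, assumed in the sketch below, keeps only episodes judged successful and periodically replays their transitions through the same Q-learning update, processing each episode backwards so that a reward at the end propagates to earlier steps in a single sweep. The class `SuccessReplay`, the transition layout and the success criterion are hypothetical.

```python
import random
from collections import deque

# Hypothetical transition layout: (state, action, reward, next_state, done)


class SuccessReplay:
    """Keep successful episodes and replay them to bias later Q-learning."""

    def __init__(self, capacity=50, seed=0):
        # Oldest episodes are discarded once capacity is reached.
        self.episodes = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add_episode(self, transitions, success):
        # Only sequences judged successful (e.g. net forward progress) are stored.
        if success:
            self.episodes.append(list(transitions))

    def replay(self, q, n_actions, alpha=0.1, gamma=0.9, k=5):
        """Replay up to k stored episodes through the Q-learning update.

        q maps a state to a list of n_actions action values and is updated in place.
        """
        for _ in range(min(k, len(self.episodes))):
            episode = self.rng.choice(self.episodes)
            # Backwards order lets an end-of-episode reward reach earlier states at once.
            for s, a, r, s_next, done in reversed(episode):
                best_next = 0.0 if done else max(q.get(s_next, [0.0] * n_actions))
                values = q.setdefault(s, [0.0] * n_actions)
                values[a] += alpha * (r + gamma * best_next - values[a])
```

For instance, replaying a stored three-step episode whose only reward (1.0) arrives at the last step, with `alpha=1.0` and `gamma=0.9`, gives the first state-action pair a value of 0.81 after one backward sweep. Replaying only successes is what lets rare good coordination sequences shape the value estimates long before they are found again by chance.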

After long sequences of unsuccessful trials, the first signs of coordinated, though still incorrect, behaviour appear. As coordination improves, the robot performs more and more forward steps until it finally finds the correct coordination scheme.

The most interesting applications for autonomous mobile robots are those that require robots with high kinematic complexity, such as exploring remote planets or inspecting rough terrain and destroyed or contaminated areas. However, such complex kinematics call for control methods that classical control theory does not offer. Methods from Artificial Intelligence are not only useful for solving such problems; at present, they are the only ones that can be applied successfully.

More information on Sir Arthur is available on the Web at: http://www.gmd.de/People/Frank.Kirchner/sir.arthur.html

Please contact:

Frank Kirchner - GMD

Tel: +49 2241 14 2402

E-mail: frank.kirchner@gmd.de