| |

What We See is What We Compute

by Jan-Mark Geusebroek and Arnold W.M. Smeulders

Understanding human vision is an intriguing challenge. Vision dominates our senses for personal observation, societal interaction, and cognitive skill acquisition. At the Intelligent Sensory Information Systems group of the University of Amsterdam, we have been researching computer vision and content-based multimedia retrieval for some time, and have recently started a new line of research into computational models for visual cognition.

Cognitive vision as defined by the ECVision research network is the processing of visual sensory information in order to act and react in a dynamic environment. The human visual system is an example of a very well-adapted cognitive system, shaped by millions of years of evolution. Since vision requires 30% of our brain capacity, and what is known about it points to it being a highly distributed task interwoven with many other modules, it is clear that modelling human vision - let alone understanding it - is still a long way off. It is also clear that there is a close link between vision and our expressions of consciousness, but statements such as this add to the mystery rather than resolving it.

Understanding visual perception to such a level of detail that a machine could be designed to mimic it is a long-term goal, and one which is unlikely to be achieved within the next few decades. However, as computers are expected in the next ten years to reach the capacity of the human brain, now is the time to start thinking about methods of constructing modules for cognitive vision systems.

For both biological and technical systems, we are examining which architectural components are necessary in such systems, and how experience can be acquired and used to steer perceptual interpretation. Since human perception has evolved to interpret the structure of the world around us, a necessary boundary condition of the vision system must be the common statistics of natural images.

Neurobiological studies have found a dozen or so different types of receptive fields in the visual system of primates. As the receptive fields have evolved to capture the world around us, they are likely to be dual to our physical surrounding. These fields must be derived from the statistical structures that are probed in visual data by integration over spatial area, spectral bandwidth and time. In our cognitive vision research, we have initially derived several receptive field assemblies, each characterising a physical quantity from the visual stimulus.

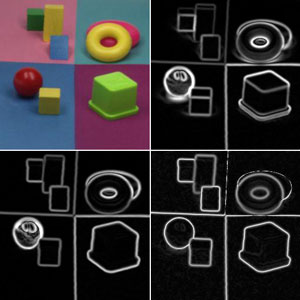

As the visual stimulus involves a very reductive projection of the physical world onto a limited set of visual measurements, only correlates to relevant entities can be measured directly. Invariants transform visual measurements to true physical quantities, thereby removing those degrees of freedom not relevant for the observer. Hence, a first source of knowledge involved in visual interpretation is the incorporation of physical laws. In the recent past, we have used colour invariance as a well-founded principle to separate colour into its correlates of material reflection, being illuminant colour, highlights, shadows, shading components and the true object reflectance. Such invariants allow a system to be sensitive to obstacles, while at the same time being insensitive to shadows. The representation of the visual input into a plurality of invariant representations is a necessary information-reduction stage in any cognitive vision system.

|

| Figure 1: Invariants for color separating shadow (b), highlights (c), and true object contours (d) from all contours (a) (Courtesy of Theo Gevers, University of Amsterdam.) |

|

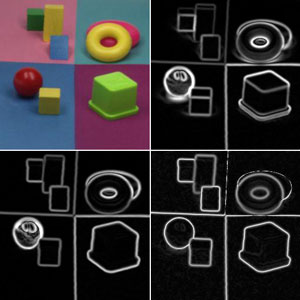

| Figure 2: Focal attention at regions where spatial statistics break with the generally observed statistics in natural scenes, that is, at regular patterns. |

To limit the enormous computational burden arising from the complex task of interpretation and learning, any efficient general vision system will ignore the common statistics in its input signals. Hence, the apparent occurrence of invariant representations decides what is salient and therefore requires attention. Such focal attention is a necessary selection mechanism in any cognitive vision system, critically reducing both the processing requirements and the complexity of the visual learning space, and effectively limiting the interpretation task. Expectation about the scene is then inevitably used to steer attention selection. Hence, focal attention is not only triggered by visual stimuli, but is affected by knowledge about the scene, initiating conscious behaviour. In this principled way, knowledge and expectation may be included at an early stage in cognitive vision. In the near future, we intend to study the detailed mechanisms behind such focal attention mechanisms.

Links:

http://www.ecvision.info/home/Home.htm

http://www.science.uva.nl/~mark/

Please contact:

Jan-Mark Geusebroek and Arnold W.M.

Smeulders, University of Amsterdam

Tel: +31 20 525 7552

E-mail: {mark,smeulders}@science.uva.nl

|