Towards Tele-Presence: Combining Ambient Awareness, Augmented Reality and Autonomous Robots

by Pieter Jonker and Jurjen Caarls

A setup for a mobile user employing Augmented Reality for indoor and outdoor applications has been developed at Delft University of Technology. To determine the user's position and orientation in the world, it uses a cognition system similar to that used by our autonomous soccer playing robots. In order to let robot and man cooperate, a knowledge system shared by man and robot is under development, in which both can express their understanding of the 'world' as they perceive it. Applications include rescue operations in hazardous situations.

The proliferation of digital communication systems, infrastructures, and services will eventually lead to a Personal Communicating Digital Assistant (PCDA): a hybrid between mobile phones and Personal Digital Assistants with ubiquitous computing capabilities. A PCDA possesses Ambient Awareness: the ability to acquire, process, and act upon application specific contextual information, taking the current user preferences and state of mind into account.

A major issue of Ambient Awareness is positioning: the device must know where it is. For outdoor navigation DGPS is a good candidate to detect one's position. Indoors it is easier to set up a network of beacons. Radio beacons can realize good accuracies. An alternative is to use a camera and a model of the world. From features in the image a position can be calculated and tracked, in the cm range. Such features (visual beacons) can be nameplates on doors, or dot patterns fixed at known positions in a building.
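
As an illustration of beacon-based positioning, the sketch below estimates a 2D position from measured distances to three beacons at known positions. It rests on assumptions that are not in the article: that each beacon yields a range measurement, that the problem is planar, and the hypothetical function name `trilaterate_2d`. It is a minimal example of the general idea, not the positioning method used in the Delft set-up.

```python
from math import hypot


def trilaterate_2d(beacons, ranges):
    """Estimate (x, y) from three beacon positions and measured ranges.

    Assumption: each beacon i at (xi, yi) gives a distance ri, so that
    (x - xi)^2 + (y - yi)^2 = ri^2. Subtracting the first equation from
    the other two removes the quadratic terms and leaves a 2x2 linear
    system, which is solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = beacons
    r1, r2, r3 = ranges

    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2

    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear; position is ambiguous")

    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


if __name__ == "__main__":
    # Hypothetical beacon layout (metres) and a user standing at (3, 4).
    beacons = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    ranges = [hypot(3.0 - bx, 4.0 - by) for bx, by in beacons]
    print(trilaterate_2d(beacons, ranges))
```

With noisy ranges or more than three beacons, a least-squares solution over all beacons would be the more usual choice.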

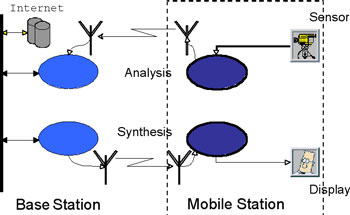

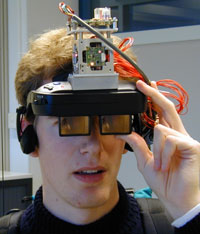

A PCDA also employs Augmented Reality: a context aware application (Figure 1) which merges virtual audio-visual information with the real world using a see-through display (Figure 2). Outdoors, DGPS captures the user's rough position; indoors, a beacon system does so. A camera captures the user's environment, which, combined with gyroscopes, accelerometers, and a compass, makes the PCDA aware of the user's absolute position and orientation with such accuracy that virtual objects can be projected over the user's real world without causing motion sickness. To determine the user's position, camera images are sent to the backbone and matched to a 3D description of the environment derived from a GIS database.

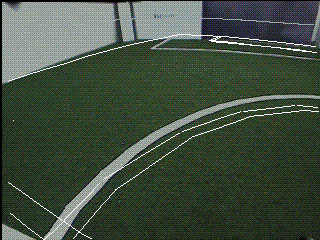

The fusion of the sensors, each with its own accuracy, update rate and latency, is based on Kalman filtering. With our combined inertial and visual tracking system we are able to track head rotation and position at an update interval of 10 ms, with a rotation accuracy of about 2 degrees and a head position accuracy in the order of a few cm at a visual cue distance of less than 3 m. In our experimental set-up a background process, once initiated by the DGPS system, continuously searches the image for visual cues and, when it finds them, tracks them in order to continuously correct the drift of the inertial sensor system (Figure 3).

Our set-up is a context aware system that can run on future generation PCDAs for professional use. Low-cost versions can be derived with a camera and some position sensing devices, but without Augmented Reality. This drastically lowers the requirements for positioning accuracy and update rate. In such a consumer version the camera can be used to realize awareness of the user's position (where is the user?), the user's attention (what is the user looking at, pointing at, maybe thinking about?), and the user's wishes (what is the problem, what information is needed?).
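
The way a Kalman filter lets occasional visual fixes correct inertial drift can be illustrated with a deliberately small sketch. It tracks a single rotation angle only. The class `HeadingKalman`, the noise values, the gyroscope bias and the 100 ms camera interval are all assumptions chosen for illustration; only the 10 ms update interval comes from the text. The actual system fuses more sensors and more state than this.

```python
class HeadingKalman:
    """Scalar Kalman filter for one head-rotation angle, in degrees.

    All noise parameters are illustrative assumptions, not measured values.
    Angle wrap-around at 360 degrees is not handled.
    """

    def __init__(self, angle=0.0, variance=1.0, gyro_noise=0.01, camera_noise=4.0):
        self.angle = angle                # current estimate (deg)
        self.variance = variance          # uncertainty of the estimate (deg^2)
        self.gyro_noise = gyro_noise      # variance added per prediction step (deg^2)
        self.camera_noise = camera_noise  # variance of a visual fix (deg^2)

    def predict(self, gyro_rate, dt):
        """Fast step: integrate the gyroscope rate; uncertainty grows."""
        self.angle += gyro_rate * dt
        self.variance += self.gyro_noise

    def update(self, camera_angle):
        """Slow step: blend in an absolute angle derived from a visual cue."""
        gain = self.variance / (self.variance + self.camera_noise)
        self.angle += gain * (camera_angle - self.angle)
        self.variance *= 1.0 - gain
        return gain


def simulate(steps=500, dt=0.01, true_rate=10.0, gyro_bias=1.0, camera_every=10):
    """Compare pure gyro integration with the fused estimate.

    Returns (dead_reckoning_error, fused_error) in degrees. The camera fix
    is noise-free here so that the run is deterministic.
    """
    kf = HeadingKalman()
    true_angle = 0.0
    dead_reckoned = 0.0
    for step in range(1, steps + 1):
        true_angle += true_rate * dt
        measured_rate = true_rate + gyro_bias  # biased gyro causes drift
        dead_reckoned += measured_rate * dt
        kf.predict(measured_rate, dt)
        if step % camera_every == 0:           # a visual cue was tracked
            kf.update(true_angle)
    return abs(dead_reckoned - true_angle), abs(kf.angle - true_angle)


if __name__ == "__main__":
    dead_error, fused_error = simulate()
    print(f"gyro only: {dead_error:.2f} deg, fused: {fused_error:.2f} deg")
```

In this toy run the gyro-only estimate drifts steadily, while the fused estimate stays bounded because each visual fix pulls it back. A fuller filter would also estimate the gyroscope bias as part of its state.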

The annual Robot Soccer world championship (see Figure 4) is organized by the international RoboCup organization (www.robocup.org). One of the most important features of the robots is their cognition system. A robot determines its own position from its odometry, which its visual system continuously calibrates using field lines and cues such as the goal colours. In about ten years walking robots (the Humanoid League) will take the lead.

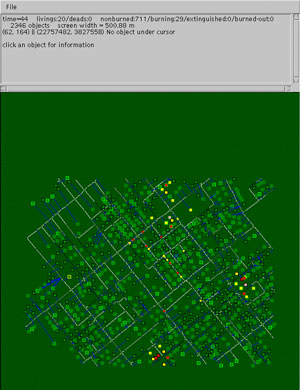

In a second domain of RoboCup, the Rescue Simulation League, the challenge is to rescue as many civilians and buildings as possible after a disaster in a city, such as the Kobe earthquake (Figure 5). This agent based simulation contest is a preparation for real Rescue Robot troops. In analogy with a dog brigade in a police force, one can think of a troop of robots under the command of the fire brigade that helps to discover and save the injured, to discover and extinguish fires, and to set up communication and information spots.

Of crucial importance is how the robots in the simulation build up their view of the world (E-Semble, see www.e-semble.com) and how they can share information using limited communication channels (Figure 6). This extends the problem faced by the real soccer playing robots, with the difference that in a simulated world only symbolic knowledge needs to be handled, whereas in the real world perceptions also play a role. As with humans, perceptions of distance, size and shape are inaccurate, because the goal is not to obtain an accurate scene description and measurement, but rather to acquire task specific information for object recognition, obstacle avoidance and object handling.

We have realised an Augmented Reality system and a team of autonomous soccer playing physical robots, and we are involved in the simulation of disasters. If Autonomous Robots, Ambient Awareness and Augmented Reality devices become more mature, a quite natural step would be to let humans work side by side with robots, eg for search and rescue. The humans, fitted with Augmented Reality PCDAs, could be made to perceive what the robots perceive on far more dangerous grounds, ie, tele-presence.

This, however, requires a mutual knowledge system for symbolic knowledge (facts) as well as perceptual knowledge. The symbolic knowledge must contain data descriptions of fixed and dynamic objects, their attributes and the relations between the objects. The perceptions of objects must include vaguer descriptions, eg, based on support vector data descriptions of the objects. As the system covers a dynamically changing environment, it must be able to learn and forget symbolic knowledge as well as perceptions. Crucially, the system must be able to ground perceptions to symbols (ie label them and relate them to facts). For example, a vague black blob perceived by some robots can be labelled 'door' by a human, after which the robots can use this fact in their world model.
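
The requirements listed above (symbolic facts, vague perceptions, grounding, and forgetting) can be made concrete with a small data-structure sketch. Everything in it is hypothetical: the names `Percept` and `SharedWorldModel`, the triple format for facts, and the age-based forgetting rule are assumptions for illustration, and the free-text description merely stands in for a learned description such as a support vector data description. It does not describe the knowledge system under development.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Percept:
    """A vague observation reported by a robot or a human (hypothetical)."""
    percept_id: str
    observer: str
    description: str            # stand-in for a learned perceptual description
    timestamp: float            # seconds
    label: Optional[str] = None  # symbol assigned once the percept is grounded


class SharedWorldModel:
    """Toy store for symbolic facts and perceptions shared by man and robot."""

    def __init__(self, max_age):
        self.max_age = max_age  # percepts older than this are forgotten
        self.percepts = {}      # percept_id -> Percept
        self.facts = set()      # (subject, relation, object) triples

    def perceive(self, percept):
        """Learn a new perception."""
        self.percepts[percept.percept_id] = percept

    def ground(self, percept_id, label, by):
        """Attach a symbol to a perception and record it as facts."""
        self.percepts[percept_id].label = label
        self.facts.add((percept_id, "is_a", label))
        self.facts.add((percept_id, "labelled_by", by))

    def forget(self, now):
        """Drop stale perceptions together with the facts about them."""
        stale = [pid for pid, p in self.percepts.items()
                 if now - p.timestamp > self.max_age]
        for pid in stale:
            del self.percepts[pid]
        self.facts = {f for f in self.facts if f[0] not in stale}
        return stale

    def labelled(self, label):
        """Return ids of all perceptions grounded to the given symbol."""
        return sorted(s for (s, rel, obj) in self.facts
                      if rel == "is_a" and obj == label)


if __name__ == "__main__":
    world = SharedWorldModel(max_age=60.0)
    world.perceive(Percept("p1", "robot_2", "vague black blob", timestamp=0.0))
    world.ground("p1", "door", by="human_1")  # the human supplies the symbol
    print(world.labelled("door"))
    print(world.forget(now=100.0))
```

The usage lines mirror the door example: a robot reports a blob, a human labels it, and every agent querying the model can then treat it as a door until the observation is forgotten.
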
Although many components of systems that maintain a world model exist today, their integration for tele-presence is ongoing.

This work is performed in cooperation with Delft University of Technology (faculty ITS; Augmented Reality), the Telematics Institute Enschede (Ambient Awareness), the University of Amsterdam (faculty of Science; Robot Soccer) and E-Semble (Rescue Simulation).
