ERCIM News No.44 - January 2001 [contents]


by John Dingliana and Carol O’Sullivan

The demand for realism in interactive virtual environments is increasing rapidly. However, despite recent advances in computing power, certain simplifications are still necessary, sacrificing accuracy in order to meet the demands of real-time animation. An ongoing project at the Image Synthesis Group (ISG) in Trinity College Dublin (TCD) addresses the problem of optimising this speed-accuracy trade-off by using adaptive processes for computationally intensive tasks such as collision detection.

Physically-based modelling concerns itself with emulating the properties and behaviours of objects in the real world. Objects in a physically-based virtual environment interact with each other according to rules modelled on the laws of physics. Unfortunately, given the finite resources of machines compared with the infinite complexity of the physical world, we must accept that no matter how detailed our model, it will at best be an approximation. This is particularly true of real-time animation, where frames must be produced on the fly at a rate of at least 20 per second. Current work at TCD addresses this problem by using adaptive, time-critical algorithms for some of the more computationally intensive processes involved in producing an animation.

A naive approach to ensuring real-time frame rates would be to simplify the scene to such a degree that the calculations needed to produce each frame of an animation could always be completed in time. However, particularly in interactive animations, the complexity of a scene frequently changes significantly over time. Such pre-emptive simplification, presumably based on worst-case scenarios, is usually excessive: the entire animation would be simplified for the sake of what are likely to be relatively few moments of high complexity. It would be more desirable to adapt the level of simplification dynamically, based on the workload at every stage of the animation. Better still would be to simplify strategically, in every frame, those parts of the scene that are less important.

One consistently time-consuming part of physically-based animation is detecting and handling cases where two or more objects in the simulated world come into contact. It is often through such events that the user interacts, directly or indirectly, with the environment. It is therefore important that these events are modelled accurately, as they significantly affect the believability of the animation from the user’s perspective. On the other hand, such events are not only frequent but also require a large amount of processing time to handle appropriately. Collision handling is thus a process where significant gains can be made by carefully balancing the speed-accuracy trade-off.

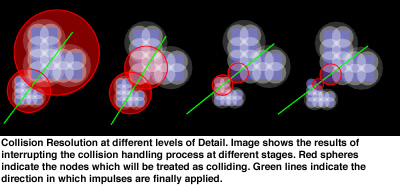

We employ an approach that uses hierarchical multi-resolution volume models to represent objects in the scene. Such a model approximates an object’s volume at different levels of detail and is separate from the model used to render the object; it is used instead in calculations involving the object’s state and behaviour in the virtual world. Typically, it consists of unions of simpler volumes (e.g. spheres) stored in hierarchical tree structures, so that it is possible to localise which parts of the colliding objects are touching. Collision detection involves intersection tests on the volume models at different levels of detail, traversing the volume trees to obtain progressively better approximations of the actual contact points. Interrupting this tree traversal at different stages yields approximations of the contact at different levels of accuracy. Thus, we can process not only individual frames but also individual objects within a single frame at different levels of detail.
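To make the idea of an interruptible sphere-tree traversal concrete, here is a minimal Python sketch. It is not taken from the ISG implementation: the names `SphereNode` and `contacts`, and the use of a fixed depth limit (`max_depth`) to stand in for interrupting the traversal, are assumptions made for illustration. Each node is a bounding sphere whose children are smaller spheres covering part of it; the traversal descends only where spheres overlap and reports the overlapping pairs it reaches as an approximation of the contact region.

```python
import math
from dataclasses import dataclass, field


@dataclass
class SphereNode:
    """One node of a sphere tree: a bounding sphere plus finer child spheres."""
    center: tuple
    radius: float
    children: list = field(default_factory=list)


def overlap(a: SphereNode, b: SphereNode) -> bool:
    """Spheres intersect if their centres are no farther apart than the sum of radii."""
    return math.dist(a.center, b.center) <= a.radius + b.radius


def contacts(a: SphereNode, b: SphereNode, max_depth: int, depth: int = 0) -> list:
    """Return pairs of overlapping nodes approximating the contact region.

    max_depth models interruption: a smaller value stops the traversal
    earlier and returns a coarser approximation of the contact.
    """
    if not overlap(a, b):
        return []  # these volumes cannot be in contact
    if depth >= max_depth or (not a.children and not b.children):
        return [(a, b)]  # stop refining here and report this pair
    # Refine whichever node still has children, preferring the larger sphere.
    if a.children and (not b.children or a.radius >= b.radius):
        return [p for c in a.children for p in contacts(c, b, max_depth, depth + 1)]
    return [p for c in b.children for p in contacts(a, c, max_depth, depth + 1)]


# Two hypothetical objects, each a root sphere covering two child spheres.
a1, a2 = SphereNode((-1.0, 0.0, 0.0), 1.0), SphereNode((1.0, 0.0, 0.0), 1.0)
b1, b2 = SphereNode((2.5, 0.0, 0.0), 1.0), SphereNode((4.5, 0.0, 0.0), 1.0)
A = SphereNode((0.0, 0.0, 0.0), 2.0, [a1, a2])
B = SphereNode((3.5, 0.0, 0.0), 2.0, [b1, b2])

coarse = contacts(A, B, max_depth=0)  # root spheres only: [(A, B)]
fine = contacts(A, B, max_depth=2)    # refined down to the leaves: [(a2, b1)]
```

Because refinement only happens where the coarser spheres already overlap, each extra level of detail is spent near the actual contact. A time-critical variant would replace the fixed depth limit with a check against the time remaining for the frame.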

Collisions in a scene are prioritised according to their potential effect on the believability of the animation. A scheduler deals with collisions based on their perceptual importance, allocating more processing time to higher-priority collisions. The criteria for judging the importance of particular objects are based on psychophysical tests designed to determine how various states and conditions affect the user’s perception of collisions.
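The sketch below shows one possible way such a scheduler could share a frame’s processing budget between collisions. At 20 frames per second each frame has roughly 50 ms in total; here, as a simplifying assumption, the budget is counted in abstract refinement steps (one step means descending one level further in a collision’s volume trees) rather than in milliseconds. The function name `schedule_refinement` and the rule of giving each step to the collision with the highest `priority / (level + 1)` are hypothetical; the article does not specify the scheduling policy, and in practice the priorities would come from the perceptual criteria described above.

```python
import heapq


def schedule_refinement(priorities: list, max_level: int, budget: int) -> list:
    """Distribute a per-frame budget of refinement steps across collisions.

    Hypothetical policy: each step goes to the collision with the highest
    priority / (level + 1), so important collisions are refined more deeply
    while less important ones still receive some attention.
    Returns the refinement level (tree depth) chosen for each collision.
    """
    levels = [0] * len(priorities)
    # heapq is a min-heap, so scores are negated; ties go to the lower index.
    heap = [(-p, i) for i, p in enumerate(priorities)] if max_level > 0 else []
    heapq.heapify(heap)
    while budget > 0 and heap:
        _, i = heapq.heappop(heap)
        levels[i] += 1
        budget -= 1
        if levels[i] < max_level:  # re-queue until fully refined
            heapq.heappush(heap, (-priorities[i] / (levels[i] + 1), i))
    return levels


# A high-priority collision (e.g. one the user is looking at) and a low-priority one.
print(schedule_refinement([4.0, 1.0], max_level=5, budget=6))  # [5, 1]
```

Dividing the priority by the current level gives diminishing returns for refining the same collision again, which is one simple way of favouring important collisions without leaving the others entirely at their coarsest approximation.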

Ongoing Work

A library for adaptive collision detection and response, called ReACT (Real-time Adaptive Collision Toolkit), is currently under construction and will allow the low-level collision-handling procedures to be used in more specific applications. Extensive user tests have been performed to determine how users perceive collisions, and an eye-tracking device is being used to track which collisions a user is looking at. Further user tests will be undertaken with the specific goal of determining how collision response is perceived. More efficient volume representation schemes using heterogeneous unions of subvolumes are also being investigated. All of this work is directed towards optimising the speed-accuracy trade-off while guaranteeing real-time animation.

Links:

http://isg.cs.tcd.ie/

Please contact:

John Dingliana - Trinity College Dublin

Tel: +353 1 608 3679

E-mail: John.Dingliana@cs.tcd.ie