Task Parallelism in an HPF Framework

by Raffaele Perego and Salvatore Orlando

High Performance Fortran (HPF) is the first standard data-parallel language. It hides most low-level programming details and allows programmers to concentrate on the high-level exploitation of data parallelism. The compiler automatically manages data distribution and generates the explicit interprocessor communications and synchronizations needed to coordinate the parallel execution. Unfortunately, many important parallel applications do not fit a pure data-parallel model and can be more efficiently implemented by exploiting a mixture of task and data parallelism. At CNUCE-CNR in Pisa, in collaboration with the University of Venice, we have developed COLThpf, a run-time support for the coordination of concurrent and communicating HPF tasks. COLThpf provides suitable mechanisms for starting distinct HPF data-parallel tasks on disjoint groups of processors, along with optimized primitives for inter-task communication where data to be exchanged may be distributed among the processors according to user-specified HPF directives.

COLThpf was primarily conceived for use by a compiler of a high-level coordination language to structure a set of data-parallel HPF tasks according to popular task-parallel paradigms such as pipelines and processor farms. The coordination language supplies a set of constructs, each corresponding to a specific form of parallelism, and allows the constructs to be hierarchically composed to express more complicated patterns of interaction among the HPF tasks. Our group is currently involved in the implementation of the compiler for the proposed high-level coordination language. This work will not begin from scratch. An optimizing compiler for SKIE (SKeleton Integrated Environment), a similar coordination language, has already been implemented within the PQE2000 national project (see ERCIM News No. 24). We only have to extend the compiler to support HPF tasks and provide suitable performance models which will allow the compiler to perform HPF program transformations and optimizations. A graphic tool aimed to help programmers in structuring their parallel applications, by hierarchically composing primitive forms of parallelism, is also under development.
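
The idea of hierarchically composing constructs can be illustrated with a small sketch. It is written in Python rather than in the coordination language itself, whose syntax is not shown here; the class names `Task`, `Farm` and `Pipeline`, the helper `total_processors`, and all processor and replica counts are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Task:
    """A leaf: one data-parallel task running on its own processor group."""
    name: str
    processors: int  # hypothetical size of the task's processor group


@dataclass
class Farm:
    """Replicates a worker construct a fixed number of times."""
    worker: "Node"
    replicas: int


@dataclass
class Pipeline:
    """Chains stages so that each stage's output stream feeds the next."""
    stages: List["Node"]


Node = Union[Task, Farm, Pipeline]


def total_processors(node: Node) -> int:
    """Sum the processors needed by every task instance in the tree."""
    if isinstance(node, Task):
        return node.processors
    if isinstance(node, Farm):
        return node.replicas * total_processors(node.worker)
    return sum(total_processors(stage) for stage in node.stages)


# A three-stage pipeline whose middle stage is a farm (illustrative numbers).
app = Pipeline([
    Task("producer", processors=2),
    Farm(Task("worker", processors=4), replicas=3),
    Task("consumer", processors=2),
])
print(total_processors(app))  # 2 + 3*4 + 2 = 16
```

Because every construct is itself a node, a farm can wrap a whole pipeline and a pipeline stage can be a farm, which is the kind of nesting the paragraph above describes.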

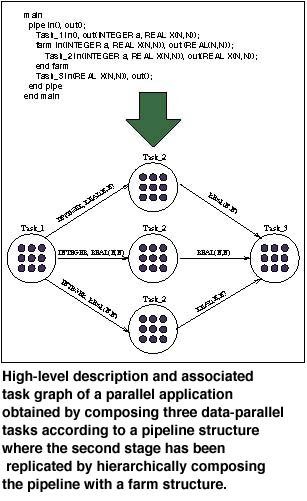

The figure shows the structure of an application obtained by composing three data-parallel tasks according to a pipeline structure, where the first and the last tasks of the pipeline produce and consume, respectively, a stream of data. Note that Task 2 has been replicated, thus exploiting a processor farm structure within the original pipeline. In this case, besides performing their own computations, Task 1 dispatches the various elements of the output stream to the replicas of Task 2, and Task 3 collects the elements received from the replicas of Task 2. The communication and coordination code is, however, produced by the compiler; programmers only have to supply the high-level composition of the tasks and, for each task, the HPF code which transforms the input data stream into the output one. The data type of the channels connecting each pair of interacting tasks is also shown in the figure. For example, Task 2 receives an input stream whose elements are pairs composed of an INTEGER and an NxN array of REALs. Of course, the same data types are associated with the output stream elements of Task 1. Programmers can specify any HPF distribution for the data transmitted over communication channels. The calls to the suitable primitives which actually perform the communications are generated by the compiler. Note that communication between two data-parallel tasks requires, in the general case, P*N point-to-point communications, where P and N are the numbers of processors executing the source and destination tasks, respectively. The communication primitives provided by our run-time system are, however, highly optimized: messages are aggregated and vectorized, and the communication schedules, which are computed only once, are reused at each actual communication.
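
The P*N bound and the reuse of communication schedules can be made concrete with a small simulation. The sketch below is not the COLThpf interface: the functions `block_owner`, `cyclic_owner`, `build_schedule` and `send` are hypothetical names, the array is one-dimensional for brevity, and the ownership rules assume the usual BLOCK and CYCLIC distributions.

```python
from collections import defaultdict


def block_owner(i, n_elems, procs):
    """Owner of element i under a BLOCK distribution."""
    block = -(-n_elems // procs)  # ceiling division gives the block size
    return i // block


def cyclic_owner(i, n_elems, procs):
    """Owner of element i under a CYCLIC distribution."""
    return i % procs


def build_schedule(n_elems, src_owner, p, dst_owner, n):
    """Group element indices by (source, destination) processor pair.

    Each key stands for one aggregated point-to-point message.
    """
    schedule = defaultdict(list)
    for i in range(n_elems):
        pair = (src_owner(i, n_elems, p), dst_owner(i, n_elems, n))
        schedule[pair].append(i)
    return dict(schedule)


def send(schedule, data):
    """Apply a precomputed schedule to one stream element (simulation)."""
    return {pair: [data[i] for i in idx] for pair, idx in schedule.items()}


P, N, SIZE = 3, 4, 12  # illustrative processor counts and array length

# CYCLIC source to BLOCK destination: every pair of processors communicates.
cyclic_to_block = build_schedule(SIZE, cyclic_owner, P, block_owner, N)
print(len(cyclic_to_block))  # 12, i.e. P*N messages

# BLOCK to BLOCK: far fewer pairs have overlapping data.
block_to_block = build_schedule(SIZE, block_owner, P, block_owner, N)
print(len(block_to_block))  # 6

# The schedule is built once and reused for every element of the stream.
for stream_element in (list(range(SIZE)), list(range(100, 100 + SIZE))):
    messages = send(block_to_block, stream_element)
```

In this toy setting the schedule depends only on the two distributions and the array size, not on the data, which is why computing it once and reusing it for each stream element pays off.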

COLThpf is currently implemented on top of Adaptor, a public domain HPF compilation system developed at GMD-SCAI by the group led by Thomas Brandes, with whom a fruitful collaboration has also begun. The preliminary experimental results demonstrate the advantage of exploiting a mixture of task and data parallelism on many important parallel applications. In particular, we implemented high-level vision and signal processing applications using COLThpf, and in many cases we found that the exploitation of parallelism at different levels significantly increases the efficiencies achieved.

Please contact:

Raffaele Perego - CNUCE-CNR

Tel: +39 050 593 253

E-mail: r.perego@cnuce.cnr.it

Salvatore Orlando - University of Venice

Tel: +39 041 29 08 428

E-mail: orlando@unive.it